Feed aggregator

454 Hints That a Chatbot Wrote Part of a Biomedical Researcher’s Paper

Scientists show that the frequency of a set of words seems to have increased in published study abstracts since ChatGPT was released into the world.

Tesla Sales Fall as Elon Musk Focuses on Self-Driving Cars

The company has devoted resources to autonomous driving rather than developing new models to attract car buyers.

Scientists Use A.I. to Mimic the Mind, Warts and All

To better understand human cognition, scientists trained a large language model on 10 million psychology experiment questions. It now answers questions much like we do.

Confused by a mysterious update that's suddenly appeared on your Windows 10 PC? Don't panic – here's what you need to know

- Windows 10 PCs are getting an update stealthily installed

- The under-the-radar arrival of KB5001716 may confuse some folks

- This patch is deployed to versions of Windows which are about to run out of support, and it'll nudge you to make a move to keep your PC secure

Windows 10 PCs are getting an update stealthily piped to them, and some folks may be confused as to what it is.

The good news is that it's nothing to worry about as such – although the update is a bit of an oddity, and it does herald the end of Windows 10 (I'll come back to why momentarily).

Neowin noticed the arrival of the patch labelled KB5001716, and observed that this is an update that Microsoft deploys ahead of 'force-installing' a new feature update.

That's somewhat dramatic phrasing for what's actually happening: KB5001716 is being pushed to Windows versions that are about to run out of support, so users will indeed need to upgrade soon, or they'll be left without security updates (and potentially open to vulnerabilities that could be exploited as a result).

So technically, the comment about a forced installation is true, but only because the upgrade is a necessary move to ensure the safety of the host PC.

Here's what Microsoft tells us about this patch: "After this update is installed, Windows may periodically display a notification informing you of problems that may prevent Windows Update from keeping your device up-to-date and protected against current threats. For example, you may see a notification informing you that your device is currently running a version of Windows that has reached the end of its support lifecycle."

In this case, the update is being quietly installed on PCs running the latest version of Windows 10, which is 22H2, as well as 21H2 – the latter is already out of support, mind you. (So is Windows 11 21H2, which, rather oddly, Microsoft also currently lists as receiving this patch.)

Analysis: the beginning of the end

What this really represents is Microsoft preparing the ground for the end of Windows 10, which happens in October of this year. With this patch now being installed on all Windows 10 PCs, as noted, those machines will receive periodic notifications warning that the operating system is about to run out of support (and security updates).

Microsoft is keeping something of a tight rein on those nudges (which will doubtless mention upgrading to Windows 11), though. The company notes that they "will respect full screen, game, quiet time and focus assist modes," meaning that they won't be overly intrusive. And hopefully they won't be too frequent, either.

So, if you've been worried about the appearance of this update, there's no need to fret. It's not a big deal in itself, although in another sense it is, because it effectively lights the fuse on the final countdown for Windows 10.

There are only three months left to go before the End of Life of the older OS now, and so there is some urgency to act. If you can't upgrade to Windows 11 due to falling short of the system requirements, you need to be thinking about alternatives (or getting an extra year of support).

I think it would really help if Microsoft were clearer about what this update is. In fact, KB5001716 is rolled out to PCs every time a support deadline for a version of Windows is imminent. Indeed, in the past, we have seen reports of this patch failing to install because it's already present on the system.

Strictly speaking, that shouldn't happen – because the patch is only pushed out to versions of Windows that are at death's door, as noted – but if it does somehow, the solution is simple. Uninstall the existing copy of KB5001716 in Windows Update, and the new one should then install successfully. Otherwise, it'll keep failing, which will doubtless get tiresome quite swiftly.

Overall, this is a somewhat odd approach from Microsoft to managing dying Windows versions. It's not surprising that KB5001716 can cause some confusion, given the stealthy (and, over the years, repeated) installation of this 'update for Windows Update', as the company bills it.

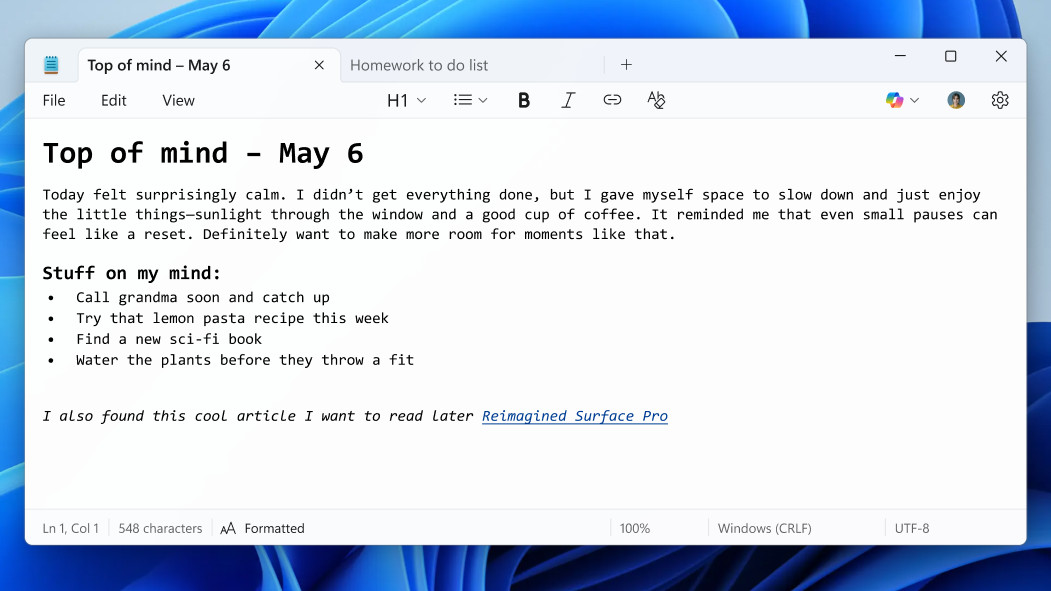

Microsoft just gave the Windows 11 Notepad app a controversial feature that people are either loving or hating

- New formatting abilities have arrived in Notepad for Windows 11

- Microsoft previously tested these features, but they're now rolling out to all Windows 11 users

- Some welcome the move as a useful addition to Notepad's editing powers, while others feel Microsoft is just bloating the text editor

Windows 11's Notepad app has been fleshed out with new formatting powers, and the app is morphing, slowly but surely, to become more like WordPad (the more extensive text editor that Microsoft canned quite some time ago).

Windows Latest reports that it downloaded an update for Notepad from the Microsoft Store which provided the new functionality to a PC running the finished version of Windows 11 (as opposed to test builds, where these features were previously being trialled).

The fresh formatting powers include the ability to add different kinds of headings or subheadings, use italics or bold text, and create numbered or bullet-point lists. It's also now possible to add hyperlinks to selected text.

Others on Reddit have been sharing their opinions on the introduction of these new lightweight formatting abilities over the past few days, so it seems the rollout is definitely underway.

So how's the new Notepad being received so far? To say it's had a mixed reception, going by the reactions on Reddit, is an understatement.

Analysis: bolstered or bloated?

The polarization in the feedback to these changes is quite something. There are two camps, as you might guess: those who welcome the move, and those who, well, very much don't.

The welcomers are generally folks who miss WordPad, which Microsoft sent to the great app graveyard in the sky early last year. WordPad was essentially a midway point between Microsoft Word, the firm's full-on word processor, and Notepad, which was originally conceived as a super-lightweight text editor for duties in a pinch.

With that compromise app now gone, what Microsoft has been doing for a while now is adding more features to Notepad in order to make up for the loss of WordPad. This latest move to usher in basic formatting tricks bolsters Notepad considerably in terms of its editing clout, and a fair few people are happy about that as a result.

The folks who feel otherwise are concerned that all Microsoft is doing is bloating Notepad. Bear in mind that this introduction of formatting powers is the latest in a long line of additions, and the fear is that eventually Notepad is going to become more bloated, and perhaps less responsive or even slower to start – which defeats the whole point of the app as a quick and easy editor.

However, there's a crucial point to remember here, namely that the new formatting features can all be switched off. If you don't want them, Microsoft has made it so that support for formatting can be dispensed with in a single click, which should go some way to placating some of the haters – even if they still won't approve of the general direction Microsoft is taking with Notepad, which doesn't appear to be a course the software giant is planning on altering.

If you've got the new version of Notepad and have noticed the formatting functionality, and you're wondering how to switch it off, that's easy. At the bottom of the app window you'll see it says 'Formatted' to indicate that formatting is active – just click on that, and all formatting will be disabled.

How to Organize Your E-Books on Kindle, Apple, Google and Nook

If the e-book app on your phone or tablet is overflowing and full of outdated files, use these tools to tidy it up.

Solar Industry Says Republican Policy Bill Would Cede Production to China

A revival of U.S. solar panel manufacturing that began during the first Trump administration could end with the phasing out of tax incentives for clean energy.

Google Gemini is set to become a significantly better phone assistant thanks to these two small upgrades

- Google Gemini can more easily access your Phone and Messages apps

- Previously, you had to allow Gemini Apps Activity, reducing your privacy

- That's no longer the case, and comes as Gemini RCS support leaks too

Google looks set to give Google Gemini some serious upgrades by allowing it greater access to your apps without forcing you to tweak your privacy settings, and giving it new abilities within those apps.

You might have seen an email that Google sent to Android phone users stating that Gemini will be able to interact with the Phone, Messages, WhatsApp, and Utilities apps, even if you have Gemini Apps Activity switched off.

The immediate reaction was one of concern, but this is actually a huge privacy win.

With Gemini Apps Activity switched on, Google can see an activity log of how you use Gemini – personal data it can then use to develop its products further. If you want to keep this data more private, you had to lose out on Gemini being able to access extensions, which allow it to perform actions using other apps, such as texting someone if you ask it to.

This change means you can keep your activity log private without losing out on these basic smart assistant features, which Google’s Assistant has had for years.

That’s not to say Google won’t store any of your Gemini activity with this setting off, though. Google admits it will store some activity data for up to 72 hours. Data is kept for 24 hours within Gemini so that the AI can respond to your conversations contextually; the longer limit is for security and safety reasons, which you can find out more about on Google’s support page.

RCS support incoming?

Perhaps in preparation for Gemini having easy access to Messages – and so presumably more people using the app – Gemini is getting an RCS upgrade too, apparently.

That’s based on Android Authority’s analysis of the latest Google app files, which hints at RCS coming to Gemini: the code lets the AI fetch the device’s RCS capabilities, something that would only be necessary if the AI were RCS-compatible.

This is significant because currently, Gemini’s inability to use RCS means it’s unable to send or play audio, images, or video through the Google Messages app. With access to this messaging standard, that could very quickly change.

As with similar leaks, there’s no guarantee that Gemini will get RCS support any time soon (or at all), but it certainly makes plenty of sense as an upgrade, so it’s one we’ll be keeping our eye out for.

'It's obvious that users are frustrated': consumer rights group accuses Microsoft of not providing a 'viable solution' for Windows 10 users who can't upgrade to Windows 11

- Microsoft recently threw a lifeline to consumers, offering alternatives to paying $30 for extended support for Windows 10

- PIRG thinks this doesn't go far enough in terms of avoiding an impending e-waste calamity

- The organization suggests Microsoft consider providing longer-term support for Windows 10, or relax the spec requirements for Windows 11

Microsoft's recent lifeline to help those stuck on Windows 10 – due to not meeting the stricter hardware requirements for a Windows 11 upgrade – simply isn't enough, according to a consumer rights group.

The Register reports that it has spoken to Lucas Rockett Gutterman, who leads the Designed to Last campaign for the Public Interest Research Group (PIRG) in the US.

As you may be aware, PIRG has a mission to combat obsolescence and e-waste. The organization has previously accused Microsoft of effectively shoving hundreds of millions of otherwise perfectly serviceable PCs into landfill with its Windows 11 requirements, come the end of Windows 10 in October 2025. (The organization isn't alone in that, either.)

You may have seen that last week Microsoft made a concession on this front. We've long known that one option for consumers will be to pay $30 for an additional year of security updates (something that's never been offered before), but now Microsoft has introduced some other choices too.

Instead of forking out cash, you can elect to use the Windows Backup app to sync all your settings to the cloud (OneDrive). Alternatively, you can redeem 1,000 Microsoft Rewards points.

However, Gutterman remains distinctly unimpressed with the new choices, telling The Register that: "Microsoft's new options don't go far enough and likely won't make a dent in the up to 400 million Windows 10 PCs that can't upgrade to Windows 11."

Gutterman adds that: "What [Microsoft hasn't] done is commit to automatically providing longer support for Windows 10 or loosening the hardware requirements for Windows 11."

"It's obvious that users are frustrated," Gutterman concludes. "They feel yanked around and don't think this [latest] announcement provides a viable solution."

Analysis: thinking beyond a one-year extension

To be fair to Microsoft, I think that giving Windows 10 users a couple of new options to avoid paying $30 to keep security updates going for an extra year (through to October 2026) is actually a very positive move – especially because simply using the Backup app isn't a particularly hefty imposition.

I can see where Gutterman is coming from with the points he makes, but the suggestion that Microsoft might consider loosening the system requirements for Windows 11 is, I feel, rather a waste of breath. That isn't going to happen at this point, and I think the software giant has been pretty clear on that.

For me, the key point raised is providing Windows 10 support beyond an extra year for consumers, and this is something I've been harping on for some time. While businesses can get a three-year program of extended security updates (if they want that much), so far Microsoft is only offering consumers a single year.

Perhaps the software giant feels that this is enough, but it really isn't – not when it comes to keeping all those old PCs off the scrapheap. Why isn't Microsoft looking at extending support for multiple years for consumers too, from an eco-friendly angle?

A second additional year of support alone would provide some welcome extra breathing room, even if Microsoft charged for it rather than offering any kind of alternative angle like using the Backup app. Of course, a non-paying option would be better. I'd even suggest making Windows 10 ad-supported to keep those security updates coming for two or three years.

What do you mean that's already happened and ads are all over the place? Ahem – in all seriousness, I think allowing Microsoft to push more ad notifications (in a still limited fashion) within Windows 10 would be a compromise many would take, rather than paying extra to keep their non-Windows 11 compatible PC alive through to 2027 or 2028. At least suffering the adverts would have a plus side to it in this scenario, and if you can't stand the idea of yet more ads, you can stump up the $30.

Whatever the case, I fully agree with PIRG that a one-year extension for consumers isn't good enough in terms of Microsoft's responsibilities towards preventing excessive e-waste – and hopefully the company will see the sense in further extended updates for consumers, too, not just businesses.

Defeat of a 10-Year Ban on State A.I. Laws Is a Blow to Tech Industry

All but a handful of states have some laws regulating artificial intelligence.

Mark Zuckerberg goes all-in on AI and might even beat Sam Altman and OpenAI to superintelligence

- Zuckerberg has created a new group called Meta Superintelligence Labs

- The goal of the group is to create AI superintelligence

- New hires from OpenAI will form the team headed up by Alexandr Wang from Scale AI

Mark Zuckerberg, CEO of Meta, has heated up the race towards AI superintelligence by restructuring the company’s artificial intelligence division with the main aim of developing artificial superintelligence: intelligence far beyond what humans are capable of.

Superintelligence could mean exponential leaps in medicine, science, and technology that dramatically change the course of humanity, but it doesn't come without risks.

In a memo to employees, Zuckerberg said he is creating a new group called Meta Superintelligence Labs, led by Alexandr Wang, former CEO of data-labeling startup Scale AI, in which Meta recently invested $14.3 billion.

A new era for humanity

As reported by Bloomberg, the memo sent by Zuckerberg reads: “As the pace of AI progress accelerates, developing superintelligence is coming into sight. I believe this will be the beginning of a new era for humanity, and I am fully committed to doing what it takes for Meta to lead the way.”

The memo goes on to list 11 recent hires to the new division, which include ex-employees of OpenAI who worked on the last 12 months of OpenAI products, along with employees from Anthropic and Google.

The list includes Trapit Bansal, who pioneered RL on chain of thought and co-created the o-series models at OpenAI; Shuchao Bi, co-creator of GPT-4o voice mode and o4-mini; and Huiwen Chang, co-creator of GPT-4o's image generation, who previously invented the MaskGIT and Muse text-to-image architectures at Google Research.

Sam Altman, CEO of ChatGPT maker OpenAI, has repeatedly written on his blog that achieving superintelligence is his company's goal.

As recently as June, he wrote, “We are past the event horizon; the takeoff has started. Humanity is close to building digital superintelligence, and at least so far it’s much less weird than it seems like it should be.”

However, it appears that Zuckerberg wants Meta to be the company that first claims to have achieved superintelligence, and he is certainly throwing an awful lot of money at this project, which has led some to question whether this is really the right approach and if achieving superintelligence is even possible.

Mark Zuckerberg paid $14.3 billion for a stake in Scale AI just to hire Alexandr Wang, and has even been offering OpenAI employees $100 million to join Meta, according to a report in the New York Times.

Zuckerberg’s new push towards superintelligence comes after Meta’s own Chief AI Scientist, Yann LeCun, said publicly last month of superintelligence that “It’s not going to happen within the next two years, there is no way in hell”, and cast doubt upon the whole idea of scaling up existing LLMs, like Meta AI or ChatGPT, to achieve superintelligence, which is the approach that companies like OpenAI seem to be following.

Meta AI is currently available inside all the Meta social media apps, like Facebook, WhatsApp, and Instagram, and can also be used within the new Meta AI app.

No, Windows 11 PCs aren't 'up to 2.3x faster' than Windows 10 devices, as Microsoft suggests – here's why that's an outlandish claim

- Microsoft has been boasting about the benefits of Windows 11 (again)

- It claims Windows 11 PCs can be 'up to 2.3x faster' than Windows 10 machines

- This comparison was made running the operating systems on different hardware, though, and it's very misleading as a result

It likely hasn't escaped your attention that Microsoft is busy trying to drive people to switch from Windows 10 to Windows 11, what with the older operating system nearing its End of Life.

In a recent blog post (noticed by Tom's Hardware), Microsoft has again been extolling the virtues of Windows 11 (and Copilot+ PCs), with Yusuf Mehdi (who heads up consumer marketing) presenting us with an array of reasons as to why the newer OS is the place to be.

The post touches on the benefits of Windows 11, including accessibility features, AI, security, and performance, and it's the latter we're focusing on here. Specifically, the following claim that Mehdi makes: "Windows 11 PCs are up to 2.3x faster than Windows 10 PCs."

That statement comes with a footnote attached – a vital caveat, in fact, which I'll come back to, but the broad suggestion here is very clear: Windows 11 is much faster than Windows 10. But is that true, or fair, to say? No, it isn't; so let's explore why, and consider the ins and outs of what Microsoft is asserting here.

What Microsoft is claiming

Microsoft argues that Windows 11 is "faster and more efficient" and that compared to Windows 10 it delivers faster updates, quicker wake-from-sleep times (for laptops), and generally better performance on the desktop. Then comes the central claim that Windows 11 computers are "up to 2.3x faster" than older Windows 10 machines.

Notice the use of "older" there, and this is where the footnote comes into play. Scan down to that and you'll see that Microsoft says it's basing this estimation on a Geekbench 6 multi-core (CPU) benchmark – and that's all we're told in this post.

However, we're presented with a URL tacked on the end of the footnote, which is actually the source of this benchmark – an array of 'Windows 11 PC performance details' nestling within Microsoft's Learn portal.

Here we learn that these comparative tests weren't run on the same PC, but different hardware. In short, the Windows 10 PCs running the Geekbench benchmark were older machines with Intel Core 6th, 8th and 10th-gen processors, whereas the Windows 11 devices used Intel Core 12th and 13th-gen CPUs. As Microsoft further notes: "Performance will vary significantly by device and with settings, usage and other factors."

There's a big problem here which is plain to see – those much older PCs (with in some cases 6th-gen CPUs) are clearly going to be way slower than a modern machine with a 12th or 13th-gen Intel chip.

What Microsoft should have done is run the Windows 10 benchmark on a PC, then install Windows 11 on the same hardware and compare the results. That would be a level playing field – what we see here absolutely isn't.

In short, that "up to 2.3x faster" claim is very misleading, because it's mostly showing the difference between the hardware components involved, not the software (the operating systems themselves). That's why the performance gulf seems so large and eyebrow-raising.

Also, citing a single benchmark (Geekbench) is a weak argument anyway. Ideally, we'd see results averaged across a few performance tests, or better still a whole suite of them. Otherwise, the suspicion is that the benchmark in question has been cherry-picked to make the marketing look as compelling as possible.

That's a relatively minor issue, of course, compared to the fundamental problem here of comparing vastly different PCs running Windows 10 versus the computers with Windows 11 installed.
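To make the like-for-like approach concrete, here is a minimal Python sketch of how such a comparison could be summarized: benchmark one machine on Windows 10, reinstall it with Windows 11, then average the per-test speedups with a geometric mean (the usual way to average ratios). The benchmark names other than Geekbench and all of the scores are hypothetical, invented purely for illustration; they are not Microsoft's data.

```python
from math import prod


def geometric_mean_speedup(before, after):
    """Geometric mean of per-benchmark speedups (after / before).

    Assumes higher scores are better and that both dicts were measured
    on the SAME hardware, so any difference reflects the OS, not the PC.
    """
    if before.keys() != after.keys():
        raise ValueError("both runs must cover the same set of benchmarks")
    ratios = [after[name] / before[name] for name in before]
    # A geometric mean treats a 2x gain and a 0.5x loss as cancelling out,
    # which an arithmetic mean of ratios would not.
    return prod(ratios) ** (1 / len(ratios))


# Hypothetical scores from ONE machine: first on Windows 10, then again
# after installing Windows 11. These numbers are invented for illustration.
win10_run = {"geekbench6_multicore": 5000, "benchmark_b": 1200, "benchmark_c": 80}
win11_run = {"geekbench6_multicore": 5100, "benchmark_b": 1260, "benchmark_c": 78.4}

speedup = geometric_mean_speedup(win10_run, win11_run)
print(f"Like-for-like speedup: {speedup:.3f}x")
```

Because the hardware is held constant, the resulting figure isolates the operating system's contribution, which is exactly what a cross-generation comparison (6th-gen vs 13th-gen CPUs) cannot do.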

Okay, so Microsoft could argue that this is only meant as an illustrative performance metric, and it does specify that Windows 11 PCs can be "up to" more than twice as fast as Windows 10 machines – so technically that's true. In most cases, they won't be anywhere near that, though; and as already noted, not having an apples-to-apples performance comparison here just makes the assertion so misleading.

What I'm also unhappy with is the way that footnote is written. It's crafted so that even if the reader glances at it – and many probably won't – they'll just think "Oh it's Geekbench" and probably leave it at that, maybe not investigating the provided URL (featuring the actual test details). Notably, that URL isn't hyperlinked, so it's easy to miss, and you have to actually highlight and copy it to head over to the Learn portal to see the story behind the OS comparison. It all feels a bit smoke-and-mirrors to say the least.

Marketing is marketing, of course, and as noted the statement is technically true – a Windows 11 PC can no doubt be more than twice as fast as a Windows 10 PC. Especially if that Windows 10 computer has been pulled out of your attic, had the dust blown off it, and then runs a benchmark that's subsequently compared to a relatively new Windows 11 PC.

Come on Microsoft – you can do better than this. Frankly, the crafty way in which this particular part of Mehdi's blog post has been concocted smacks of desperation in the effort to persuade folks to upgrade to Windows 11.

What makes this worse is that, anecdotally for me, Windows 11 does indeed feel a bit snappier than Windows 10 in general everyday performance (although I realize that's a very subjective thing, and not everyone's experience going by some of the complaints I see online).

In short, I think Microsoft has a point to boast about here in terms of Windows 11 offering somewhat peppier performance, and being more responsive in some respects – but it's spoiled any of that positivity with an over-the-top marketing effort here.

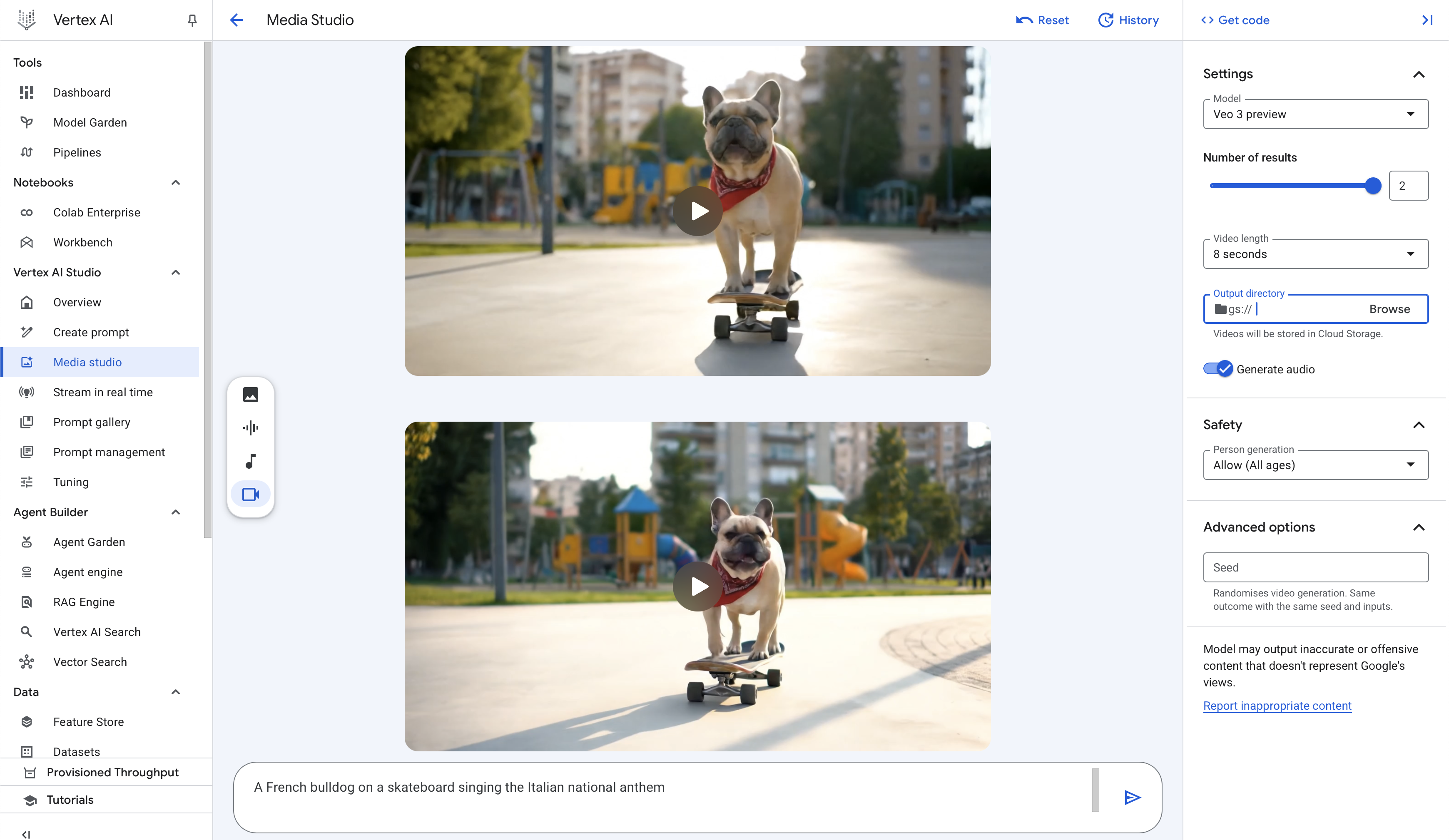

This simple trick gets you 3 months of Google Gemini Veo 3 for free - here’s how you can create the best AI videos without dropping a dime

- You can get access to Google's Veo 3 for free with this simple trick

- Sign up for a Google Cloud free trial with $300/£222 of credit and use Veo 3 directly from Vertex AI

- Generate 8-second clips with audio without spending a penny

Google's Veo 3 is everywhere, making it nearly impossible to tell what videos are real on social media anymore.

The clips may be limited to 8 seconds, but with the ability to generate audio too, and stitch multiple clips together, Veo 3 is well and truly the best AI video generator.

To use Veo 3, you normally need access to a paid Google AI subscription, but this neat workaround using a Google Cloud trial gives you $300/£222 worth of Cloud Billing credits and a way to use Veo 3 through Vertex AI.

Sound complicated? Don't worry, it's super easy to set up, and you'll be generating AI video in no time without opening your wallet.

How to get access to Veo 3 for free

Head to the Google Cloud website and sign up for a free $300 credit trial to unlock higher limits in Vertex AI. You'll need to enter a billing address, and the credits will last for 90 days, but don't worry, you won't be charged unless you actively choose to subscribe.

Once you've signed up for your free Google Cloud trial, head to Vertex AI and select Media Studio. You'll now be able to generate Veo 3 videos without spending any money at all.

While Veo 3 is seriously impressive, it's worth noting that AI video generation uses a lot of energy, so if you're aiming to be sustainable with your AI usage, you may want to limit this tool to necessity, rather than generating AI slop.

That said, you're free to use Veo 3 however you please. It's really that simple, with no purchase necessary.

Cloudflare Introduces Blocking of A.I. Scrapers By Default

The tech company’s customers can automatically block A.I. companies from exploiting their websites, it said, as it moves to protect original content online.

China Bans Some Portable Batteries From Flights as Safety Concerns Grow

Airlines and governments around the world have tightened restrictions on the devices after a series of accidents. The ban in China has caught some travelers off guard.

He Made Billions on Google and PayPal. Now, He’s Betting on News.

Michael Moritz co-founded The San Francisco Standard, a local news organization. It is acquiring Charter, a start-up focused on the future of work.

My favorite VR headset of 2025 has started selling out – here’s why you should grab one before it’s gone for good

- Xbox's limited-edition Quest 3S has sold out at Meta's store

- You can still find it at Best Buy in the US and Argos in the UK

- Meta has previously said that once it sells out it's gone for good

The Meta Quest 3S Xbox edition is sold out at Meta’s own store, but thankfully it’s still available to buy from Meta’s third-party partners Best Buy in the US and Argos in the UK, for exactly the same price – although we don't know for how much longer that will be the case.

As a reminder, this is a limited-edition headset drop, and one that I think you’ll want to take advantage of while you can.

Why, you ask?

Well, I’ve just spent too much of my weekend playing VR games on the headset using the included Xbox wireless controller, and attempting to binge the Xbox Game Pass catalog – I say attempting because my attention has been entirely captured by Indiana Jones and the Great Circle, and I can see why we gave it four stars.

Yes, you can do this with a regular Meta Quest 3S too, but because you need to acquire your own Xbox wireless controller rather than getting one with your headset, the setup process has significantly more points of friction – even if you already have a controller, swapping it between your VR setup and whatever console/PC it was already connected to can be tedious, and enough of a hurdle to put you off.

What’s more, not only is this limited-edition Meta Quest 3S a delight to use, and a delight to look at with its beautiful Xbox-ified black and green design, it’s also one of the best VR headset deals I’ve seen all year.

A great deal

That’s because its separate parts (the 128GB Meta Quest 3S, its Xbox wireless controller, the Elite strap, and a 3-month Xbox Game Pass Ultimate subscription) would collectively come to $494.85 / £464.94 if purchased individually.

By buying the bundle you’re not only getting an exclusive headset and controller design, you’re snagging a $94.86 / £84.86 saving – and that’s before you even consider the three months of free Meta Horizon+ that come with all new Quest 3S purchases, worth $23.97 / £23.97 at $7.99 / £7.99 per month.
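For anyone wanting to check the US figures, here's a quick Python sketch of the arithmetic. Note that the bundle's own price isn't quoted above; the sketch simply derives it by subtracting the quoted saving from the quoted separate-purchase total, so treat it as implied rather than confirmed.

```python
from decimal import Decimal

# Figures quoted in the article (US pricing). Decimal avoids the
# floating-point rounding errors you'd get using floats for money.
separate_total_usd = Decimal("494.85")  # headset + controller + Elite strap + 3 months Game Pass Ultimate
bundle_saving_usd = Decimal("94.86")

# Implied (not quoted) bundle price: separate total minus the saving.
implied_bundle_price_usd = separate_total_usd - bundle_saving_usd

# Meta Horizon+ bonus: three months at the quoted monthly price.
horizon_plus_value_usd = 3 * Decimal("7.99")

print(f"Implied bundle price: ${implied_bundle_price_usd}")
print(f"Horizon+ bonus value: ${horizon_plus_value_usd}")
```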

What’s more, unlike some bundles that are padded with unnecessary and unwanted extras, you’ll actually want to own each of the add-ons included with this headset.

Technically, this bundle is still full-price, but if you wanted to purchase each part of it individually you’d pay close to $100 more, and unlike some bundles, each component is worthwhile. The Elite strap adds extra comfort for your VR gaming sessions, while the Xbox controller and Game Pass subscription will let you play hit games on a giant virtual screen. Plus, the whole setup looks stunning.

This deal isn’t officially a discount, but if you were to buy every item on its own, you’d pay close to £90 more, so this is a really great deal. What’s more, each element of this bundle is worth owning, and that’s before you even begin to appreciate the gorgeous, unique black and green color scheme of the headset and its accessories, which is a draw on its own.

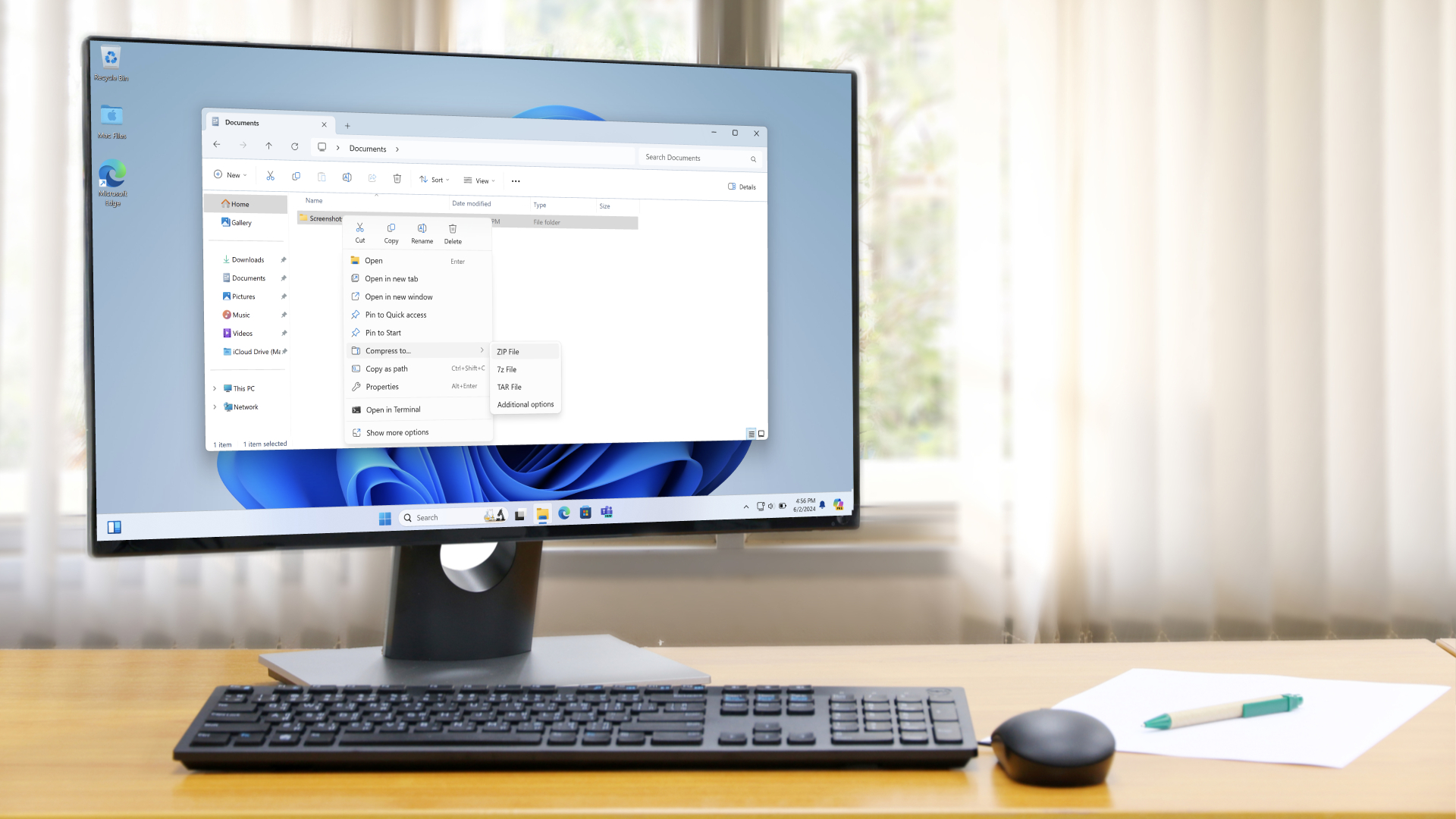

I don't generally recommend downloading Windows 11's preview updates, but some gamers may want to chance Microsoft's latest effort

- Windows 11 has a new optional update

- It fixes a bunch of bugs that have been annoying gamers

- One of the most annoying glitches causes the monitor screen to go black for up to a few seconds when playing games, and it's now resolved

Windows 11's latest patch makes some important improvements for PC gamers, including the resolution of a seriously frustrating black screen glitch, and some useful changes are provided elsewhere, too.

This is the optional update for June (known as KB5060829) and, as Windows Latest reports, it introduces the mentioned fixes for gamers, as well as speeding up the performance of a common task under Windows 11 - namely, unzipping files.

Let's look at those gaming-related solutions first, though, and the highlight here is that Microsoft has cured a problem where "unnecessary display resets" were happening to some folks.

This bug manifests as the screen going black for up to a few seconds when gaming, and also in everyday use. As one Redditor puts it: "So this might fix that issue where my screen just randomly blinks black for a split second while browsing certain websites and playing games?"

That's very much the hope, and plenty of other Windows 11 gamers chime in to say they're suffering at the hands of this bug. There are a couple of reports from users claiming the fix has indeed worked, so that's a hopeful early sign. (Also remember that these fixes are gradually being rolled out, so not everyone will get the cure immediately.)

Microsoft also notes a further fix that should reduce the off-putting screen flashing that can occur when graphics settings are changed in a game (or in some apps).

Another resolution applied in the 'graphics' category for this optional update is the fix for some displays going "unexpectedly green," which I'm guessing is a reference to green screen crashes, rather than a visual corruption, though it could be the latter, and Microsoft doesn't clarify this. Either way, that'd be an annoying problem to face, and it should be vanquished now.

As already mentioned, also noteworthy here is better performance when unzipping files compressed in the 7z (7-Zip) or RAR formats, which File Explorer supports natively in Windows 11. The speed boost is most noticeable when an archive contains a large number of files, which should now be extracted more swiftly. Windows Latest observes that extraction is up to 15% faster.

Other nifty moves with this preview update include the taskbar automatically resizing icons to fit in more apps when it gets crowded, and interestingly, we're also getting our first glimpse of the new PC-to-PC migration experience. The latter is provided in the Windows Backup app, and it's been in testing previously, offering an easy way to switch over to a new PC.

This is just the initial rollout, mind you, and the PC-to-PC migration feature won't be fully enabled yet. To begin with, we're just getting the landing page as a "first look at what's coming," and Microsoft notes that: "Support for this feature during PC setup will arrive in a future update."

In the near term, though, this will be more of a key feature for Windows 10 PCs, and Microsoft will want to push it through for those devices with the operating system's end-of-support deadline looming large. (Microsoft is very keen on getting those folks to upgrade to a new Windows 11 PC, which has caused quite some controversy in recent times.)

Finally, another useful touch for gamers with this patch is a fix for some apps freezing up when Alt-Tabbing out of a game running in full-screen. A note of caution here: Windows Latest explains that a couple of folks testing this patch have experienced other weirdness when Alt-Tabbing out of games, such as the mouse cursor lagging. At this point, though, these are just scattered reports, and I wouldn't read much into them yet.

Analysis: Take a chance, or wait it out?

Of course, this is a preview update, and as such, bugs - like the potential fresh problems with Alt-Tabbing - can be expected. Microsoft is still testing this patch ahead of its release next month, and that's why it's optional.

Because of this, I usually advise Windows 11 users to ignore these patches, especially as there isn't long to wait before the full update is deployed, and any last-minute bugs will (hopefully) be ironed out. In fact, this time around, the wait is particularly short, and the full update for Windows 11 in July arrives in just over a week.

Still, if any given issue is really driving you bananas, you may want to take your chances with the optional update right now (and hope you get lucky in terms of the rollout timeframe). And judging from the feedback on Reddit, a lot of people are really suffering at the hands of the bug causing the screen to go black for a second or three. If this happens at a crucial juncture of a game, it can be seriously frustrating, of course.

Indeed, plenty of Redditors are saying that this bug has been causing them to wonder what on earth is going wrong with their PC, and to suspect a fault with the GPU driver (which, given Nvidia's current woes along those lines, is an obvious conclusion to reach). At least we now know the problem is with Windows 11, and fingers crossed that this patch (and by extension the July release) fully resolves these temporary black screen dropouts.


Microsoft confirms Windows 11 25H2, and in some ways, I'm glad we're not getting a major update like Windows 12 this year

- Microsoft has confirmed Windows 11 25H2 is the update for this year

- The upgrade is now officially in testing

- It'll be a more minor update delivered as an 'enablement package' and that's a good thing as we're likely to see fewer bugs than with 24H2

Microsoft has confirmed that Windows 11 25H2 is the next update for its desktop operating system, arriving later this year.

That ends whispers that we might just see the release of Windows 12 - or whatever the next incarnation of the OS will be called - later this year. However, hope of that had already dwindled to pretty much dying embers in all honesty.

The announcement came in an IT Pro blog post from Microsoft that Tom's Hardware flagged.

Microsoft told us: "Today, Windows 11, version 25H2 became available to the Windows Insider community, in advance of broader availability planned for the second half of 2025."

The Windows Insider community is the formal name for those who are testing Windows 11, running preview versions of the OS (in various channels, from the earliest builds in the Canary channel to the Release Preview channel, which, as the name suggests, is one step away from release).

So, some of those testers are now officially using Windows 11 25H2, and Microsoft further confirmed another suspicion that's been previously aired about the next big update for the operating system - that it's what's known as an 'enablement package' or 'eKB' for short.

This means that the move to 25H2 will be a swift upgrade for those who are on Windows 11 24H2, and as Microsoft puts it, the update will be "as easy as a quick restart".

The 25H2 update would typically be expected to arrive in September or October, and I wouldn't expect it any sooner - neither would I rule out the possibility of a November release. As ever, it'll be an ongoing rollout, so it could take some time to reach your PC.

Analysis: Fewer features, but fewer problems?

How does the enablement package delivery method Microsoft is employing here help to ensure a speedy and simple update? It's because 25H2 is built on the same 'servicing branch' as 24H2, meaning that they use the same code. They are, for all intents and purposes, the same, except 25H2 has some extra features added on top. And because these versions of Windows 11 share the same codebase, those features can effectively be preloaded onto devices running 24H2.

What this means is that when it comes to applying the update, it's already in place, and it just has to be enabled. Hence the phrase 'enablement package', and with just a simple switch being flicked to turn on 25H2's features when the update is sent live, it's basically just a quick reboot, and you're done. At least in theory, anyway, barring any issues.
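To make the "already in place, just has to be enabled" idea concrete, here's a toy model in Python. It's a simplification under our own assumptions, not how Windows Update is actually implemented. The class, method, and feature names are hypothetical. The model captures only the idea described above: new feature code arrives in a dormant state through regular updates on the shared servicing branch, and the enablement package simply switches it on and bumps the version.

```python
from dataclasses import dataclass, field


@dataclass
class WindowsInstall:
    """Toy model of a PC on the shared 24H2/25H2 servicing branch (hypothetical)."""
    version: str = "24H2"
    # Feature code already delivered by regular updates, but switched off.
    staged_features: set[str] = field(default_factory=set)
    # Features the user can actually see and use.
    enabled_features: set[str] = field(default_factory=set)

    def apply_cumulative_update(self, new_features: set[str]) -> None:
        # New feature code lands on disk in a dormant state; nothing visible changes.
        self.staged_features |= new_features

    def apply_enablement_package(self, target_version: str) -> bool:
        # The enablement package carries no new feature code of its own in this model:
        # it flips the dormant features on and bumps the version label.
        self.enabled_features |= self.staged_features
        self.version = target_version
        return True  # signals that a quick restart is all that's needed


pc = WindowsInstall()
pc.apply_cumulative_update({"feature_a", "feature_b"})  # hypothetical 25H2 features
print(pc.version, sorted(pc.enabled_features))

needs_restart = pc.apply_enablement_package("25H2")
print(pc.version, sorted(pc.enabled_features), needs_restart)
```

The first print shows the PC still on 24H2 with no new features visible, even though the code is already on disk. After the enablement package is applied, the second print shows 25H2 with both features switched on. That's why the real-world update can be "as easy as a quick restart."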

However, what this also means is that there'll be no major changes with Windows 11 25H2. An enablement package allows for a fast deployment, but as noted, it doesn't change anything major in Windows 11's code, so we'll likely get a fairly limited dollop of new features with 25H2.

In short, don't get your hopes up for anything earthshaking this year regarding Microsoft's changes to Windows 11. However, the flipside is that without any major moves, there's far less chance of any nasty bugs popping up.

Windows 11 24H2 brought in a new underlying platform - Germanium - which was a huge shift, and my theory has long been that this is why we've seen more than the usual helping of critters skittering about in the works of the OS (and some very strange glitches, too). In 2025, that shouldn't happen, and hopefully, Microsoft will get back on course with ensuring Windows 11 runs more smoothly (knock on wood, fingers crossed, etc.).
