Feed aggregator

Could ChromeOS eventually run on your Android phone? Google’s demo of exactly that is an exciting hint for the future

A recent report has revealed that Google held a private demonstration that showed off a tailored version of ChromeOS, its operating system (OS) for Chromebooks, running on an Android device. Of course, Android is the operating system for Google's smartphones and tablets, while ChromeOS was developed for its line of Chromebook laptops and Chromebox desktop computers.

Unnamed sources told Android Authority that Google showed other companies a demo of a specially built Chromium OS (an open source version of ChromeOS hosted and developed by Google), codenamed ‘ferrochrome.’

The custom build ran in a virtual machine (think of this as a digital emulation of a device) on a Pixel 8. While the Android smartphone supplied the hardware, its screen wasn't used. Instead, the OS was projected to an external display, made possible by a recent update that lets the Pixel 8 connect to one.

Google was able to do this using the Android Virtualization Framework (AVF), which makes it possible to run a secure and private execution environment for highly sensitive code. The AVF was developed for other purposes, but this demonstration showed that it could also be used to run other operating systems.

This demonstration is evidence that Google has the capability to run ChromeOS in Android, but there's no word, or even a remote hint, from Google that it has any plans to merge these two platforms. It also doesn't mean that the average Android device user will be able to swap over to ChromeOS, or that Google is planning to ship a version of its Pixel devices with ChromeOS either.

In short, don’t read much into this yet, but it’s significant that this can be done, and possibly telling that Google is toying with the idea in some way.

As time has gone on, Google has developed Android and ChromeOS to be more synergistic, notably giving ChromeOS the capability to run Android apps natively. In the past, you may recall Google even attempted to make a hybrid of Android and ChromeOS, with the codename Andromeda. However, work on that was shelved as the two operating systems were already seeing substantial success separately.

To put these claims to the test, Android Authority created its own custom ‘ferrochrome’ build of ChromeOS, which it was able to run in a virtual machine on a Pixel 7 Pro, confirming that it's possible and providing a video of this feat.

For now, then, we can only wait and see if Google is going to explore this any further. But it’s already interesting to see Android Authority demonstrate this is possible, and that the tools to do this already exist if developers want to attempt it themselves. Virtualization is a popular method to run software originally built for another platform, and many modern phones have the hardware specs to facilitate it. It could also be a pathway for Google to improve the desktop mode for the upcoming Android 15, as apparently, the version seen in beta has some way to go.

Google's answer to OpenAI's Sora has landed – here's how to get on the waitlist

Among the many AI treats that Google tossed into the crowd during its Google I/O 2024 keynote was a new video tool called Veo – and the waiting list for the OpenAI Sora rival is now open for those who want early access.

From Google's early Veo demos, the generative video tool certainly looks a lot like Sora, which is expected to be released "later this year." It promises to whip up 1080p resolution videos that "can [be] beyond a minute" and in different cinematic styles, from time-lapses to aerial drone shots.

Veo, which is the engine behind a broader tool from Google's AI Test Kitchen called VideoFX, can also help you edit existing video clips. For example, you can give it an input video alongside a command, and it'll be able to generate extra scenery – Google's example being the addition of kayaks to an aerial coastal scene.

But like Sora, Veo is also only going to be open to a select few early testers. You can apply to be one of those 'Trusted Testers' now using the Google Labs form. Google says it will "review all submissions on a rolling basis," and some of the questions (including one that asks you to link to relevant work) suggest it could initially only be available to digital artists or filmmakers.

Still, we don't know the exact criteria to be an early Veo tester, so it's well worth applying if you're keen to take it for a spin.

The AI video tipping point

Veo certainly isn't the first generative video tool we've seen. As we noted when the Veo launch first broke, the likes of Synthesia, Colossyan, and Lumiere have been around for a while now. OpenAI's Sora has also hit the mainstream with its early music videos and strange TED Talk promos.

These tools are clearly hitting a tipping point because even the relatively conservative Adobe has shown how it plans to plug generative AI video tools into its industry-standard editor Premiere Pro, again "later this year."

But the considerable computing power needed to run the likes of Veo's diffusion transformer models, and maintain visual consistency across multiple frames, is also a major bottleneck on a wider rollout, which explains why many are still in demo form.

Still, we're now reaching a point where these tools are ready to partially leap into the wild, and being an early beta tester is a good way to get a feel for them before the inevitable monthly subscriptions are defined and rolled out.

Windows 11 update delivers a fix for broken VPNs – but also sees adverts infiltrate the Start menu

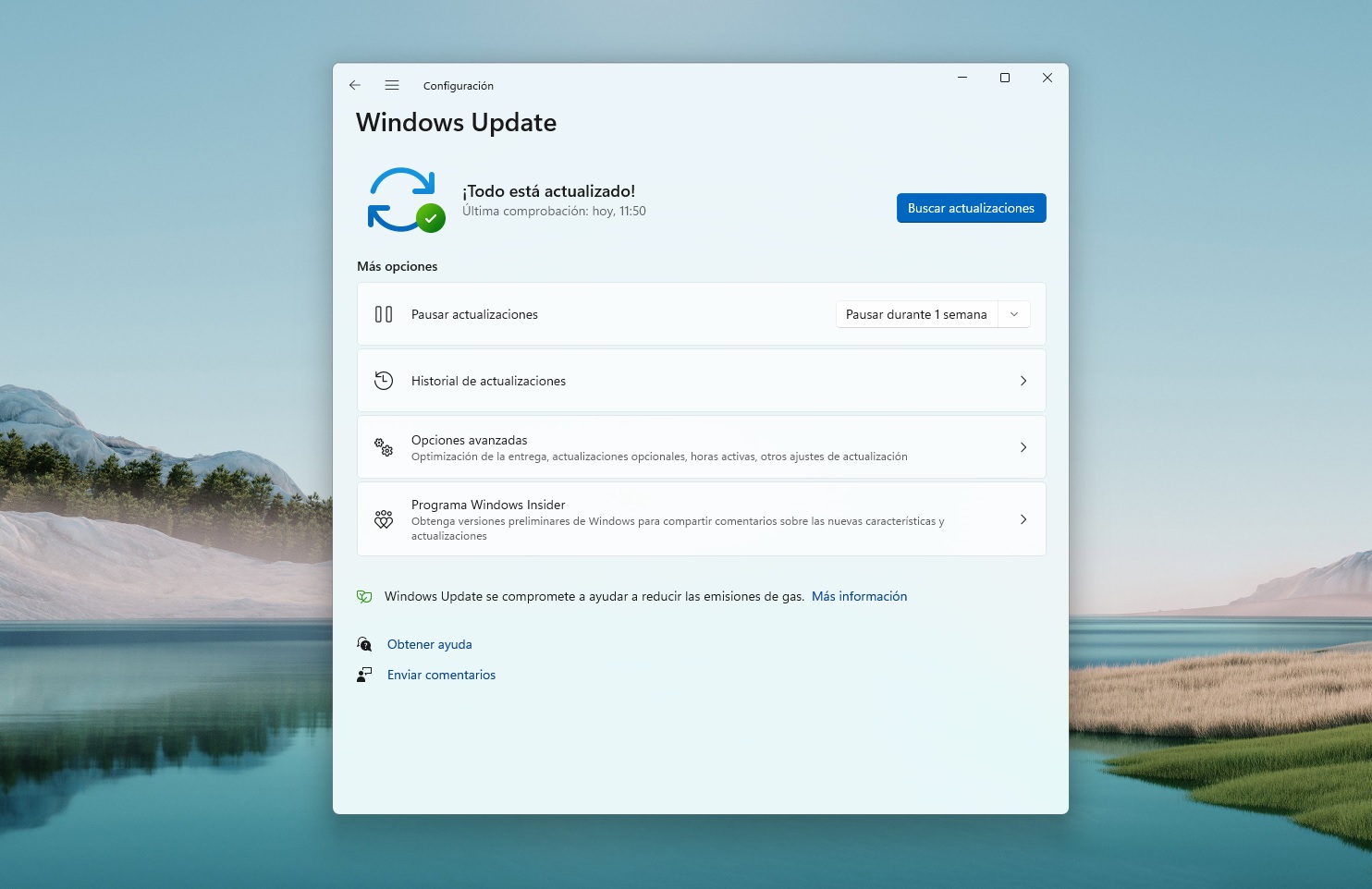

Happy Microsoft Patch Tuesday May 2024 to all who celebrate! Yesterday saw a new update for Windows 11 that brings fixes for existing issues, along with other changes, as part of Microsoft’s monthly patch cycle. Version KB5037771 is now available to Windows 11 users, and it brings some notable developments like new Start menu features and a fix for a previously reported VPN-related issue.

To get the new update, check your Windows Update app. Your system might have already downloaded the update and prepared it for installation, but if not, click on ‘Check for Updates.’ Note that this is a mandatory update that Microsoft strongly urges you to install for security reasons, and it’s available for Windows 11 23H2 and 22H2 users.

One of the changes that update KB5037771 delivers is adverts embedded in the Start menu, as previously witnessed in preview builds (including last month’s optional update for Windows 11). The ads are presented as recommendations, highlighting certain apps from the Microsoft Store from a group of selected developers.

Explaining the rationale behind this move, Microsoft says it’s intended to help users discover apps that they might find useful or entertaining that they may not be aware of. If you don’t find a particular ad (sorry, ‘recommendation’) helpful, Windows Latest observes that you can dismiss it by right-clicking on it.

You can go further and block these ads for recommended third-party apps in your Start menu by going to the following location:

Settings > Personalization > Start

Here, you can switch off the toggle next to the ‘Show recommendations for tips, app promotions, and more’ option, and this will prevent these promotions or recommendations, or whatever you want to call them (adverts, ahem), from appearing.

Further note that in the Recommended section of the Start menu, Microsoft has also started showing apps that you use frequently but don’t have pinned in your Start menu or taskbar.

These changes to the Start menu are due to begin rolling out to users in the coming weeks, but some people might not be shown the ads depending on the region they’re in, Windows Latest reports, noting that those in Europe may not get the adverts (lucky them).

The update also incorporates a fix for a problem that caused VPN connection failures, which were seemingly a side-effect of the April 2024 cumulative update. Some users reported issues with certain VPN connections, and Microsoft has since acknowledged the problems and added that they should be resolved after this new May 2024 update.

Other changes that KB5037771 brings include upgraded MSN cards on your lock screen and new widget animations.

It feels like a broken record at this point, writing about Microsoft pushing forward with this advertising strategy in Windows 11. Is there any chance the software giant will rethink this policy? It doesn’t seem likely, and if anything, I can see Microsoft continuing to integrate ads into more and more places in Windows 11.

Okay, so in this case, the adverts can be turned off, which is at least something - but I fear that Microsoft is going to continue in this direction, unless it starts getting a lot of negative feedback.

Smartphones Can Now Last 7 Years. Here’s How to Keep Them Working.

Google and Samsung used to update smartphone software for only three years. That has changed.

AMD could drop support for Windows 10 with Zen 5 Strix Point CPUs – a cold, hard reminder the OS is on its way out

Windows 10’s days are numbered – well, we know that, as support runs out next year (with some potentially nasty consequences) – but it seems AMD is underlining that with its incoming Strix Point APUs, at least if a fresh rumor is right.

If a well-connected person posting on Weibo is correct – this is seemingly Lenovo’s China manager, as TechSpot reports – then the release of Zen 5-based Strix Point chips will witness Team Red drop support for Windows 10 drivers.

That means these incoming APUs – a fancy term for all-in-one processors, with integrated graphics and an NPU bundled up together – will only be good for Windows 11 PCs.

The manager also estimates that the IPC (Instructions per Clock) increase for Zen 5 CPUs will be around 10%, which is notably lower than some other estimates we’ve seen thus far (which are more in the 15% to 20% area, or even slightly higher).

Naturally, as this is a rumor from Weibo – not our most favored source for speculation – heap on the seasoning with this one.

Analysis: It’s all about AI

Windows 10 is, of course, still a lot more popular than Windows 11. Indeed, Microsoft’s newer OS has struggled to attract users since it was first released, and Windows 11 still only has 26% of Windows market share going by the latest statistics from Statcounter – with Windows 10 maintaining the lion’s share.

Even among gamers, where Windows 11 is doing much better than with everyday users, it still trails Windows 10 (although the newer platform is finally closing in on 50%, according to the latest Steam hardware survey).

So why would AMD look to abandon Windows 10 early – if, indeed, this is happening? Well, for starters, Windows 10 is on its way out of the door next year, as we already noted (support ends in October 2025).

Also, as TechSpot observes, Strix Point chips are at least partly about pushing hard with AI – and getting close to 80 TOPS (trillions of operations per second). And when it comes to AI, Windows 11 is very much where it’s at – the revolutionary piece of Microsoft’s puzzle, purportedly ‘AI Explorer,’ will be introduced with the 24H2 update, and this will need those faster chips capable of pushing further with TOPS to make AI functionality run swiftly enough.

In short, we need to remember that Strix Point will be powering AI PCs and new devices that’ll have Windows 11 anyway.

Don’t worry, Google Gemini’s new bank-scam detection for phone calls isn’t as creepy as it sounds

Google I/O 2024 brought forth a huge array of new ideas and features from Google, including some useful life hacks for the lazy, and AI was present almost everywhere you looked. One of the more intriguing new features can detect scam calls as they happen, and warn you not to hand over your bank details – and it’s not as creepy as it might at first sound.

The feature works like this: if you get a call from a scammer pretending to be a representative of your bank, Google Gemini uses its AI smarts to work out that the impersonator is not who they claim to be. The AI then sends you an instant alert warning you not to hand over any bank details or move any money, and suggests that you hang up.

Involving AI in the process raises a few pertinent questions, such as whether your bank details ever get sent to Google’s servers for processing as they're detected. That’s something you never want to happen, because you don’t know who can access your bank information or what they might do with it.

Fortunately, Google has confirmed (via MSPoweruser) that the whole process takes place on your device. That means there’s no way for Google (or anyone else) to lift your banking information off a server and use it for their own ends. Instead, it stays safely sequestered away from prying eyes.

A data privacy minefield

Google’s approach cuts to the core of many concerns about the growing role AI is playing in our lives. Because most AI services need huge server banks to process and understand all the data that comes their way, you can end up sending a lot of sensitive data to an opaque destination, with no way of really knowing what happens to it.

This has had real-world consequences. In March 2024, AI service Cutout.Pro was hacked and lost the personal data of 20 million users, and it’s far from the only example. Many firms are worried that employees may inadvertently upload private company data when using AI tools, which then gets fed into the AI as training data – potentially allowing it to fall into the hands of users outside the business. In fact, exactly that has already happened to Samsung and numerous other companies.

This all goes to show the importance of keeping private data away from AI servers as much as possible – and, given the potential for AI to become a data privacy minefield, Google’s decision to keep your bank details on-device is a good one.

Inside OpenAI’s Library

OpenAI may be changing how the world interacts with language. But inside headquarters, there is a homage to the written word: a library.

Senators Propose $32 Billion in Annual A.I. Spending but Defer Regulation

Their plan is the culmination of a yearlong listening tour on the dangers of the new technology.

Few Chinese Electric Cars Are Sold in U.S., but Industry Fears a Flood

Automakers in the United States and their supporters welcomed President Biden’s tariffs, saying they would protect domestic manufacturing and jobs from cheap Chinese vehicles.

OpenAI’s Chief Scientist, Ilya Sutskever, Is Leaving the Company

In November, Ilya Sutskever joined three other OpenAI board members to force out Sam Altman, the chief executive, before saying he regretted the move.

Darren Criss to Return to Broadway as a Robot in Love

The actor will star in “Maybe Happy Ending,” an original musical set in a future Seoul. It will begin previews in September.

Google Unveils AI Overviews Feature for Search at 2024 I/O Conference

The tech giant showed off how it would enmesh A.I. more deeply into its products and users’ lives, from search to so-called agents that perform tasks.

Google Search is getting a massive upgrade – including letting you search with video

Google I/O 2024's entire two-hour keynote was devoted to Gemini. Not a peep was uttered about the recently launched Pixel 8a or what Android 15 will bring on release. The only times smartphones or Android came up were in the context of how Gemini is improving them.

The tech giant is clearly going all-in on AI, so much so that the stream concludes by boldly displaying the words “Welcome to the Gemini era”.

Among all the updates that were presented at the event, Google Search is slated to gain some of the more impressive changes. You could even argue that this year's upgrade is one of the most impactful the search engine has received in its 25 years as a major tech platform. Gemini gives Google Search a huge performance boost, and we can’t help but feel excited about it.

Below is a quick rundown of all the new features Google Search will receive this year.

1. AI Overviews

The biggest upgrade coming to the search engine is AI Overviews, which appears to be the launch version of SGE (Search Generative Experience). It provides detailed, AI-generated answers to inquiries. Responses come complete with contextually relevant text as well as links to sources and suggestions for follow-up questions.

Starting today, AI Overviews is leaving Google Labs and rolling out to everyone in the United States as a fully-fledged feature. For anyone who used the SGE, it appears to be identical.

Response layouts are the same and they’ll have product links too. Google has presumably worked out all the kinks so it performs optimally. Although when it comes to generative AI, there is still the chance it could hallucinate.

There are plans to expand AI Overviews to more countries with the goal of reaching over a billion people by the end of 2024. Google noted the expansion is happening “soon,” but an exact date was not given.

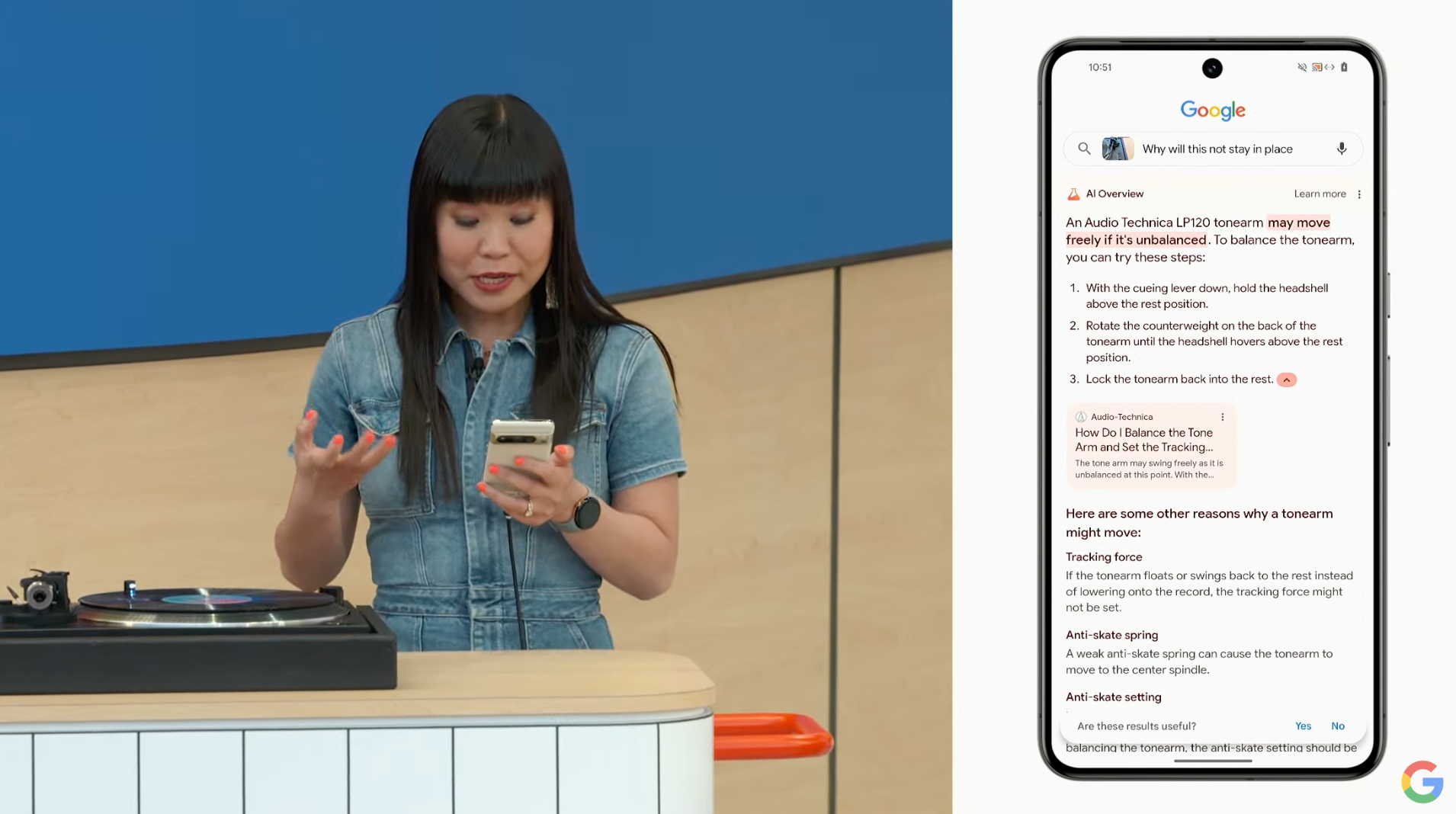

2. Video Search

AI Overviews is bringing more to Google Search than just detailed results. One of the new features allows users to upload videos to the engine alongside a text inquiry. At I/O 2024, the presenter gave the example of purchasing a record player with faulty parts.

You can upload a clip and ask the AI what's wrong with your player, and it’ll provide a detailed answer naming the exact part that needs to be replaced, plus instructions on how to fix the problem. You might need a new tone arm or a cueing lever, but you won't need to type a question into Google to get an answer. Instead, you can speak directly into the video and send it off.

Searching with video will launch soon for “Search Labs users in English in the US,” with plans for further expansion into additional regions over time.

3. Smarter AI

Next, Google is introducing several performance boosts; however, none of them are available at the moment. They’ll be rolling out soon to the Search Labs program, exclusively for users in the United States and in English.

First, you'll be able to click one of two buttons at the top to simplify an AI Overview response or ask for more details. You can also choose to return to the original answer at any time.

Second, AI Overviews will be able to understand complex questions better than before. Users won’t have to ask the search engine multiple short questions. Instead, you can enter one long inquiry – for example, a user can ask it to find a specific yoga studio with introductory packages nearby.

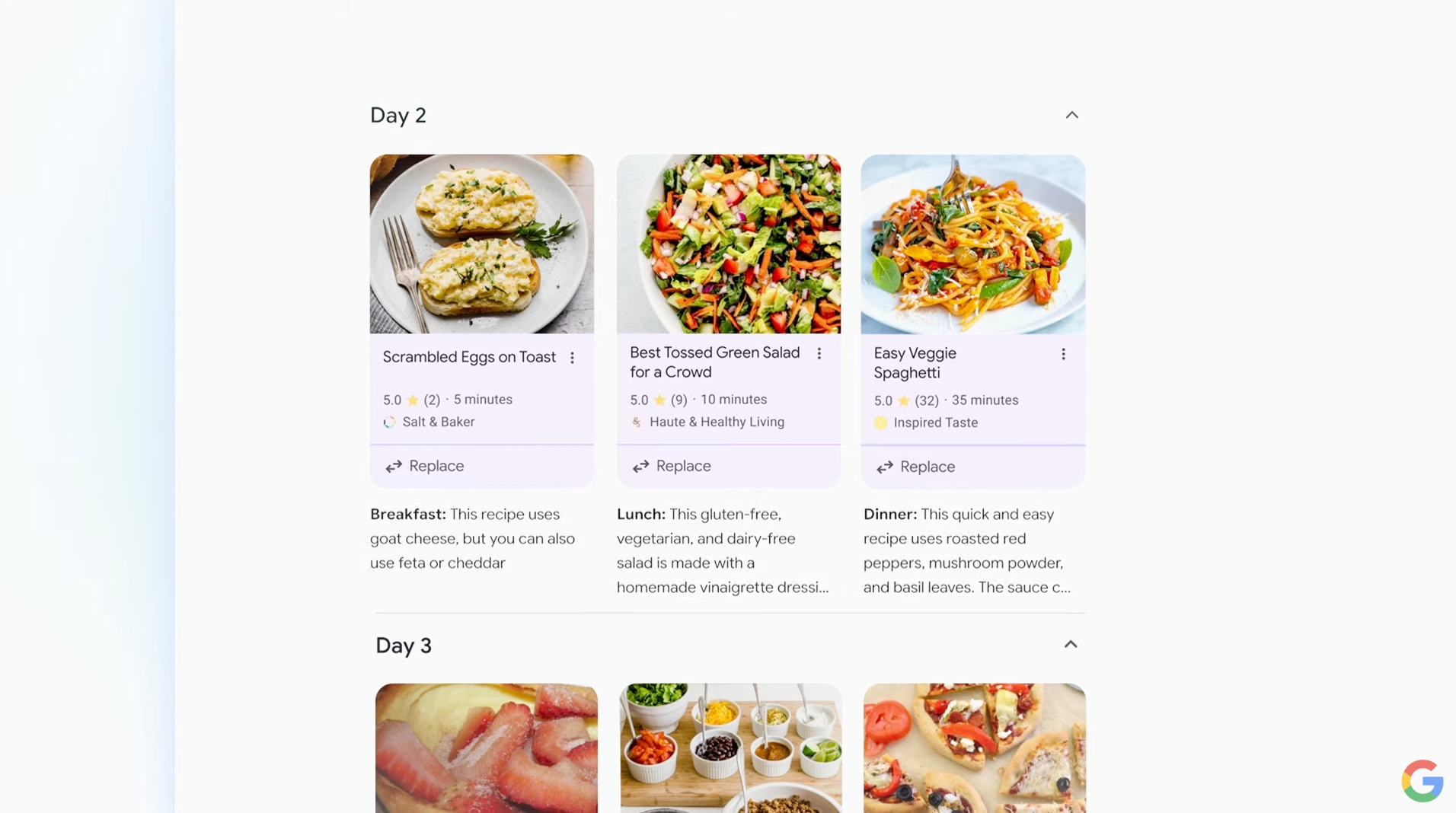

Lastly, Google Search can create "plans" for you. This can be either a three-day meal plan that’s easy to prepare or a vacation itinerary for your next trip. It’ll provide links to the recipes plus the option to replace dishes you don't like. Later down the line, the planning tool will encompass other topics like movies, music, and hotels.

All about Gemini

That’s pretty much all of the changes coming to Google Search in a nutshell. If you’re interested in trying these out and you live in the United States, head over to the Search Labs website, sign up for the program, and give the experimental AI features a go. You’ll find them near the top of the page.

Google I/O 2024 dropped a ton of information on the tech giant’s upcoming AI endeavors. Project Astra, in particular, looked very interesting, as it can identify objects, code on a monitor, and even pinpoint the city you’re in just by looking outside a window.

Ask Photos was pretty cool, too, if a little freaky. It’s an upcoming Google Photos tool capable of finding specific images in your account much faster than before, and it can reportedly “handle more in-depth queries” with startling accuracy.

If you want a full breakdown, check out TechRadar's list of the seven biggest AI announcements from Google I/O 2024.

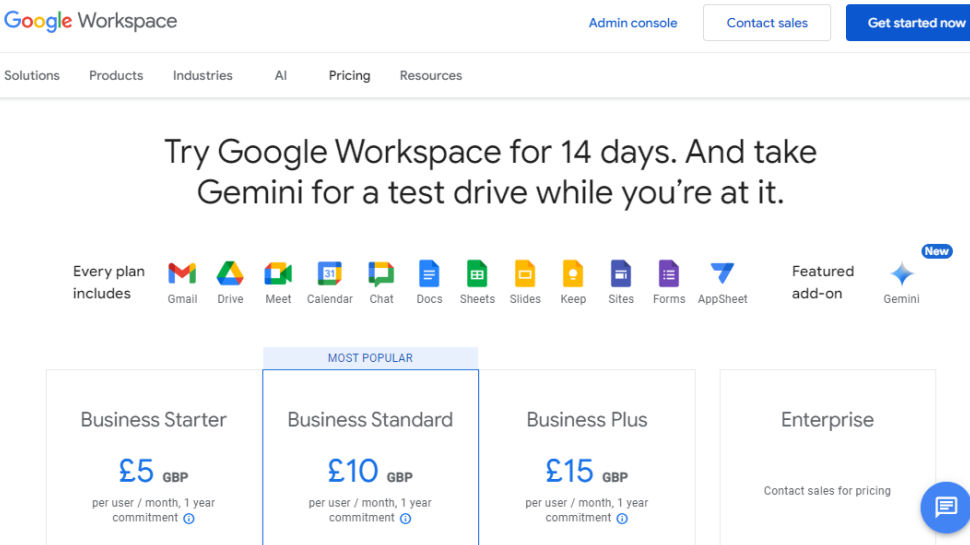

Google Workspace is getting a talkative tool to help you collaborate better – meet your new colleague, AI Teammate

If your workplace uses Google's Workspace productivity suite of apps, then you might soon get a new teammate: an AI Teammate, that is.

In its mission to improve our real-life collaboration, Google has created a tool that pools shared documents, conversations, comments, chats, emails, and more into a single virtual generative AI chatbot: the AI Teammate.

Powered by Google's own Gemini generative AI model, AI Teammate is designed to help you concentrate more on your role within your organization and leave the tracking and tackling of collective assignments and tasks to the AI tool.

This virtual colleague will have its own identity, its own Workspace account, and a specifically defined role and objective to fulfil.

When AI Teammate is set up, it can be given a custom name along with other customizations, including its job role, a description of how it's expected to help your team, and specific tasks it's supposed to carry out.

In a demonstration of an example AI Teammate at I/O 2024, Google showed a virtual teammate named 'Chip' who had access to a group chat of those involved in presenting the I/O 2024 demo. The presenter, Tony Vincent, explained that Chip was privy to a multitude of chat rooms that had been set up as part of preparing for the big event.

Vincent then asked Chip whether the I/O storyboards had been approved (the type of question you might ask a colleague), and Chip was able to answer because it can analyze all of the conversations it had been keyed into.

As AI Teammate is added to more threads, files, chats, emails, and other shared items, it builds a collective memory of the work shared in your organization.

In a second example, Vincent showed another chat room for an upcoming product release and asked whether the team was on track for the product's launch. In response, AI Teammate searched through everything it had access to, such as Drive, chat messages, and Gmail, and synthesized all of the relevant information it found to form its response.

When it was ready (after about a second, or slightly less), AI Teammate delivered a digestible summary of its findings. It flagged a potential issue to make the team aware, and then gave a timeline summary showing the stages of the product's development.

Because the demo took place in a group space, Vincent noted that anyone could follow along and jump in at any point, for example to ask a question about the summary or to have AI Teammate transfer its findings into a Doc file, which it did once the file was ready.

AI Teammate is only as useful as it's customized to be, and Google promises that it can make your collaborative work seamless, since it's integrated into Google's host of existing products that many of us already use.

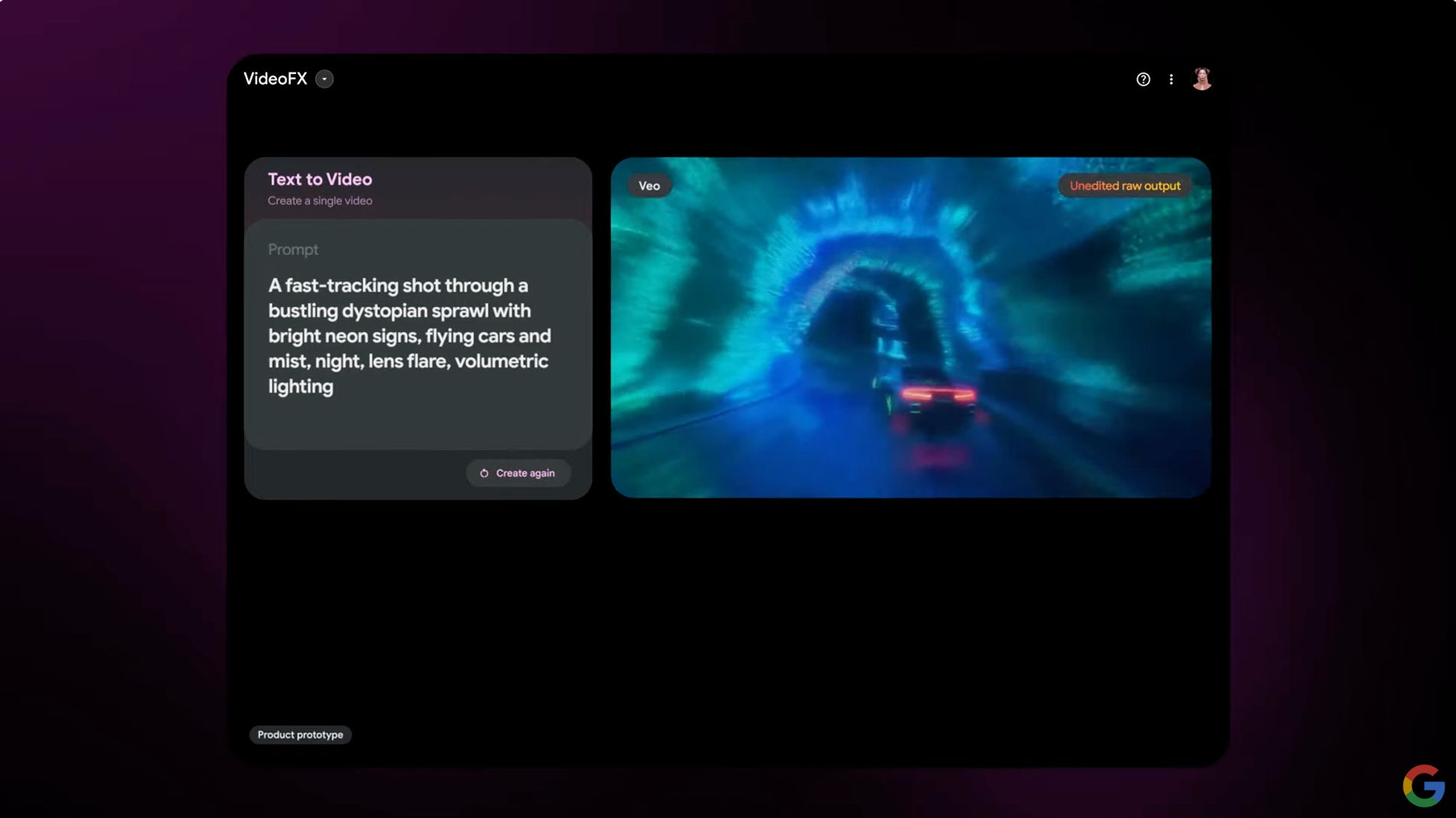

Google reveals new video-generation AI tool, Veo, which it claims is the 'most capable' yet – and even Donald Glover loves it

Google has unveiled its latest video-generation AI tool, named Veo, at its Google I/O 2024 live event. Veo is described as offering "improved consistency, quality, and output resolution" compared to previous models – and it's one of the more intriguing announcements from this year's Google I/O show.

Generating video content with AI is nothing new; tools like Synthesia, Colossyan, and Lumiere have been around for a little while now, riding the wave of generative AI's current popularity. Veo is only the latest offering, but it promises to deliver a more advanced video-generation experience than ever before.

To showcase Veo, Google recruited a gang of software engineers and film creatives, led by actor, musician, writer, and director Donald Glover (of Community and Atlanta fame) to produce a short film together. The film wasn't actually shown at I/O, but Google promises that it's "coming soon".

As someone who is simultaneously dubious of generative AI in the arts and also a big fan of Glover's work (Awaken, My Love! is in my personal top five albums of all time), I'm cautiously excited to see it.

Eye spy

Glover praises Veo's capabilities on the basis of speed: this isn't a deletion of human ideas, but rather a tool that creatives can use to "make mistakes faster", as Glover puts it.

The flexibility of Veo's prompt reading is a key point here. It's capable of understanding prompts in text, image, or video format, paying attention to important details like cinematic style, camera positioning (for example, a bird's-eye-view shot or a fast-tracking shot), time elapsed on camera, and lighting types. It also has an improved capability to accurately and consistently render objects and how they interact with their surroundings.

Google DeepMind CEO Demis Hassabis demonstrated this with a clip of a car speeding through a dystopian cyberpunk city.

It can also be used for things like storyboarding and editing, potentially augmenting the work of existing filmmakers. While working with Glover, Google DeepMind research scientist Kory Mathewson explains how Veo allows creatives to "visualize things on a timescale that's ten or a hundred times faster than before", accelerating the creative process by using generative AI for planning purposes.

Veo will be debuting as part of a new experimental tool called VideoFX, which will be available soon for beta testers in Google Labs.

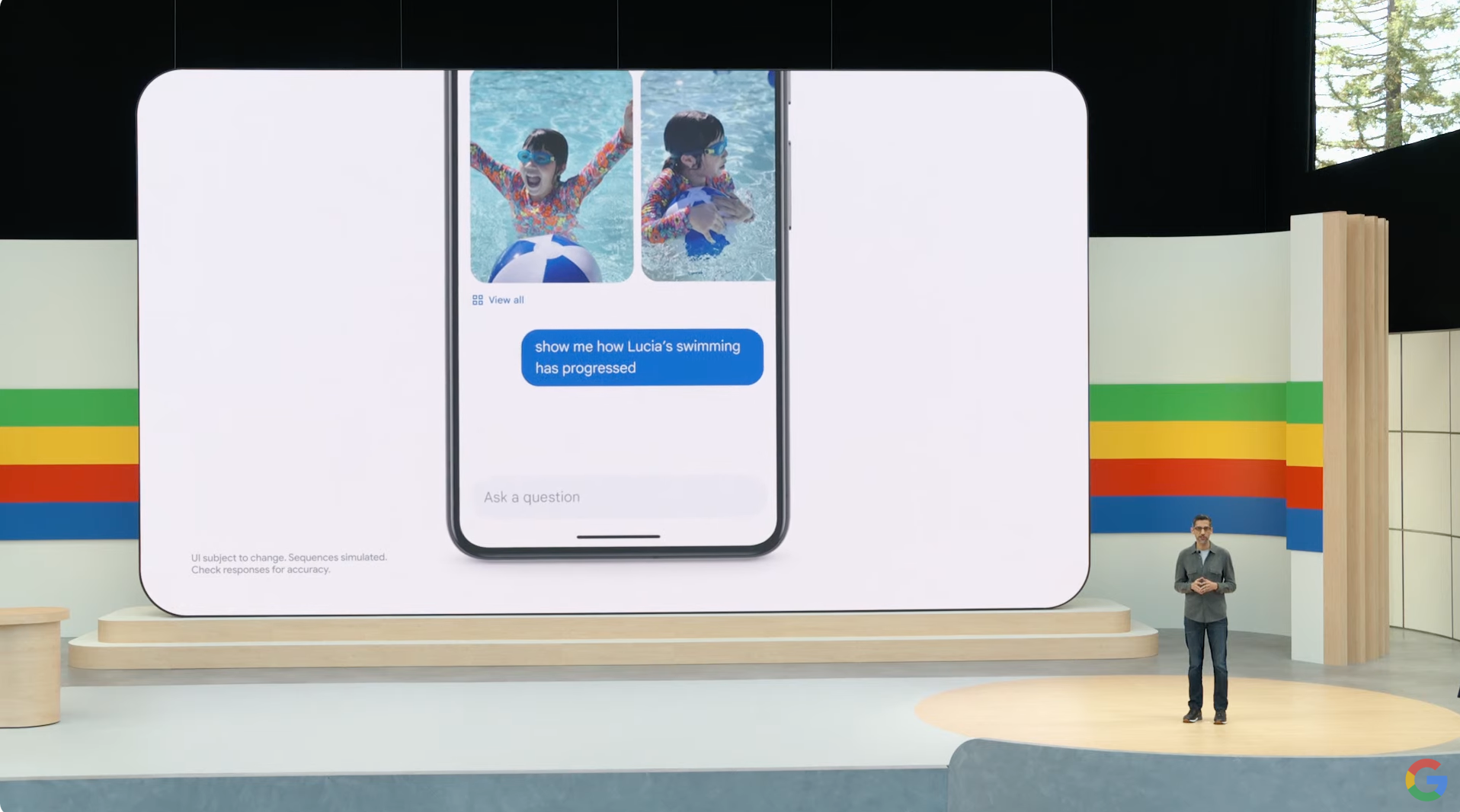

Google I/O showcases new 'Ask Photos' tool, powered by AI – but it honestly scares me a little

At the Google I/O 2024 keynote today, CEO Sundar Pichai debuted a new feature for the nine-year-old Google Photos app: 'Ask Photos', an AI-powered tool that acts as an augmented search function for your photos.

The goal here is to make finding specific photos faster and easier. You ask a question – Pichai's example is 'what's my license plate number' – and the app uses AI to scan through your photos and provide a useful answer. In this case, it isolates the car that appears the most, then presents you with whichever photo shows the number plate most clearly.

It can reportedly handle more in-depth queries, too: Pichai went on to explain that if your hypothetical daughter Lucia has been learning to swim, you could ask the app to 'show me how Lucia's swimming has progressed', and it'll present you with a slideshow showcasing Lucia's progression. The AI (powered by Google's Gemini model) is capable of identifying the context of images, such as differentiating between swimming in a pool and snorkeling in the ocean, and even highlighting the dates on photos of her swimming certificates.

While the Photos app already had a search function, it was fairly rudimentary, only really capable of identifying text within images and retrieving photos from selected dates and locations.

Ask Photos is apparently "an experimental feature" that will start to roll out "soon", and it could get more features in the future. As it is, it's a seriously impressive upgrade – so why am I terrified of it?

Eye spy

A major concern surrounding AI models is data security. Gemini is a predominantly cloud-based AI tool (its data parameters are simply too large to be run locally on your device), which introduces a potential security vulnerability as your data has to be sent to an external server via the internet, a flaw that doesn't exist for on-device AI tools.

Ask Photos is powerful enough to not only register important personal details from your camera roll, but also understand the context behind them. In other words, the Photos app – perhaps one of the most innocuous apps on your Android phone's home screen – just became the app that potentially knows more about your life than any other.

I can't be the only person who saw this revealed at Google I/O and immediately thought 'oh, this sounds like an identity thief's dream'. How many of us have taken a photo of a passport or ID to complete an online sign-up? If malicious actors gain remote access to your phone or are able to intercept your Ask Photos queries, they could potentially take better advantage of your photo library than ever before.

Google says it's guarding against this kind of scenario, stating that "The information in your photos can be deeply personal, and we take the responsibility of protecting it very seriously. Your personal data in Google Photos is never used for ads. And people will not review your conversations and personal data in Ask Photos, except in rare cases to address abuse or harm."

It continues that "We also don't train any generative AI product outside of Google Photos on this personal data, including other Gemini models and products. As always, all your data in Google Photos is protected with our industry-leading security measures."

So, nothing to worry about? We'll see. But quite frankly... I don't need an AI to help me manage my photo library anyway. Honestly Google, it really isn't that hard to make some folders.

Google's Project Astra could supercharge the Pixel 9 – and help Google Glass make a comeback

I didn't expect Google Glass to make a minor comeback at Google I/O 2024, but it did, thanks to Project Astra.

That's Google's name for a new prototype of AI agents, underpinned by the Gemini multimodal AI, that can make sense of video and speech inputs, and smartly react to what a person is effectively looking at and answer queries about it.

Described as a "universal AI" that can be "truly helpful in everyday life", Project Astra is designed to be proactive, teachable, and able to understand natural language. In a video, Google demonstrated this with a person using what looked like a Pixel 8 Pro with the Astra AI running on it.

By pointing the phone's camera at a room, the person was able to ask Astra to "tell me when you see something that makes sound", to which the AI flagged a speaker it could see within the camera's viewfinder. From there the person was able to ask what a certain part of the speaker was, with the AI replying that the part in question is a tweeter and handles high frequencies.

But Astra does a lot more: it can identify code on a monitor and explain what it does, and it can work out where someone is in a city and provide a description of that area. Heck, when prompted, it can even make an alliterative sentence around a set of crayons in a fashion that's a tad Dr. Seuss-like.

It can even recall where the user has left a pair of glasses, as the AI remembers where it last saw them. It can do this because the AI is designed to encode the video frames it has seen, combine that video with speech inputs, and assemble it all into a timeline of events, caching that information so it can recall it quickly later.

Then, switching to a person wearing the Google Glass 'smart glasses', Astra could see that the person was looking at a diagram of a system on a whiteboard, and it could suggest where optimizations might be made when asked.

Such capabilities suddenly make Glass seem genuinely useful, rather than the slightly creepy and arguably dud device it was a handful of years ago; maybe we'll see Google return to the smart glasses arena after this.

Project Astra can do all of this thanks to multimodal AI, which in simple terms is a mix of neural network models that can process data and inputs from multiple sources; think of combining information from cameras and microphones with knowledge the AI has already been trained on.

Google didn't say when Project Astra will make it into products, or even into the hands of developers, but Google's DeepMind CEO Demis Hassabis said that "some of these capabilities are coming to Google products, like the Gemini app, later this year." I'd be very surprised if that doesn't mean the Google Pixel 9, which we're expecting to arrive later this year.

Now, it's worth bearing in mind that Project Astra was shown off in a very slick video, and in reality such onboard AI agents can suffer from latency. But it's a promising look at how Google will likely integrate genuinely useful AI tools into its future products.

Can Google Give A.I. Answers Without Breaking the Web?

Publishers have long worried that artificial intelligence would drive readers away from their sites. They’re about to find out if those fears are warranted.

A.I.’s ‘Her’ Era Has Arrived

New chatbot technology can talk, laugh and sing like a human. What comes next is anyone’s guess.

OpenAI just snubbed Windows 11 users with its Mac-only ChatGPT app – here’s why

OpenAI just announced its new GPT-4o (‘o’ for ‘omni’) model, which combines text, video, and audio processing in real time to answer questions, hold better conversations, solve maths problems, and more. It’s the most ‘human’-like iteration of the large language model (LLM) so far, and it will shortly be available to all users for free. GPT-4o has launched alongside a macOS app for ChatGPT Plus subscribers to try, but interestingly, there’s no Windows app just yet.

A blog post from OpenAI specifies that the company “plan[s] to launch a Windows version later this year,” having chosen to offer the tech to Mac users first. This is odd, considering Microsoft has pumped billions of dollars into OpenAI and has its own OpenAI-powered digital assistant, Copilot. So, you would think the platform to receive initial exclusive access to a groundbreaking bit of tech like GPT-4o would be Microsoft Windows.

Why do things this way around? One theory floated by Windows Latest is that this could be a clever move on OpenAI’s part, as Apple users might be more inclined than Windows users to prefer a native app over a web app. As an Apple user, I would indeed prefer to have an app for something I might use as regularly as GPT-4o, rather than having to navigate a web app, so perhaps other Apple fans feel the same.

A further consideration is that, with AI Explorer arriving as the big feature for Windows 11 later this year (in the 24H2 update), Microsoft may not want another feature like GPT-4o muddying the AI waters in its desktop OS.

Jumping in before Apple can

With such a leap between the public version of ChatGPT and the new GPT-4o model (which is also set to be available for free, albeit with limited use), OpenAI will surely want as many people using its product as possible. So, venturing into macOS territory makes sense if the firm wants to tap into a group of people who haven’t gravitated to its AI naturally.

So far, Apple has not made any great effort to integrate AI tools into its operating system in the way that Microsoft has embedded Copilot into the desktop taskbar. That leaves OpenAI with the perfect opportunity to jump onto the desktops of Mac users and show off what GPT-4o can do before Apple gets the chance to introduce its own AI assistant for macOS, if it does so at all.

We'll have to wait for WWDC to find out whether Apple has its own take on the Copilot concept ready, or whether Mac users interested in artificial intelligence tools will find a new bestie in GPT-4o. That’s not to say I wouldn’t eat up whatever Apple has up its sleeve for Mac users; it's just that switching over may be a little harder once I’m used to the way GPT-4o for Mac works for me.
