
Feed aggregator

OpenAI Unveils New ChatGPT That Listens, Looks and Talks

Chatbots, image generators and voice assistants are gradually merging into a single technology with a conversational voice.

Apple and Google have teamed up to help make it easier to spot suspicious item trackers

As part of iOS 17.5, Apple is finally rolling out its Detecting Unwanted Location Trackers specification, allowing mobile users to locate suspiciously placed AirTags and other similar devices. Simply, this update has been a long time coming.

To give a quick breakdown of the situation at large, Bluetooth trackers were being used as a way to stalk people. Google announced that it and Apple were teaming up to tackle this problem. The former sought to upgrade its Find My Device network quickly but decided to postpone the launch, partly to wait until Apple finished developing its new standard.

With iOS 17.5, though, Apple states that your iPhone will notify you if an unknown Bluetooth tracker device was placed on you. If it sniffs something out, an “[Item] Found Moving With You” alert will appear on the smartphone screen.

Upon detection, your iPhone can trigger a noise on the tracker to help you locate it. An accompanying notification will include a guide showing you how to disable the gadget. You can find these instructions on Apple’s support website.

Supporting devices

The detection tool can locate other Find My accessories so long as the third-party trackers are built to the specification that Apple and Google have agreed on. Devices that don't follow the new specification aren't compatible and will not work. Third-party tag manufacturers like Chipolo and Motorola are reportedly committing future releases to the new standard, which means the iOS feature will also detect forthcoming models.

Android devices have been capable of detecting Bluetooth trackers for some time, and Google is currently rolling out its long-awaited Find My Device upgrade to smartphones. Thanks to the Detecting Unwanted Location Trackers specification, it’ll work in conjunction with Apple’s network.

Part of iOS 17.5

There is more to iOS 17.5 than just the security patch.

First, it’s introducing a dynamic wallpaper celebrating the LGBTQ+ community just in time for Pride Month. Second, the company is adding a new game called Quartile to Apple News Plus. It’s sort of like Scrabble, where you have to make up words using small groups of letters.

Moreover, Apple News Plus subscribers can download audio briefings, entire magazine issues, and more for offline enjoyment. When you're back online, the downloaded content list “will automatically refresh.”

Besides these, 9To5Mac has confirmed even more changes like the Podcasts widget receiving support for dynamic colors. This alters the color of the box to match a podcast’s artwork. The publication also confirms the existence of Repair State, a “special hibernation mode” that lets people send in their iPhone for service without disabling the Find My connection.

To install iOS 17.5, head over to your iPhone’s Settings menu. Go to General then Software Update to receive the patch. And be sure to check out TechRadar's list of the best iPhone for 2024.

Biden Bans Chinese Bitcoin Mine Near U.S. Nuclear Missile Base

An investigation identified national security risks posed by a crypto facility in Wyoming. It is near an Air Force base and a data center doing work for the Pentagon.

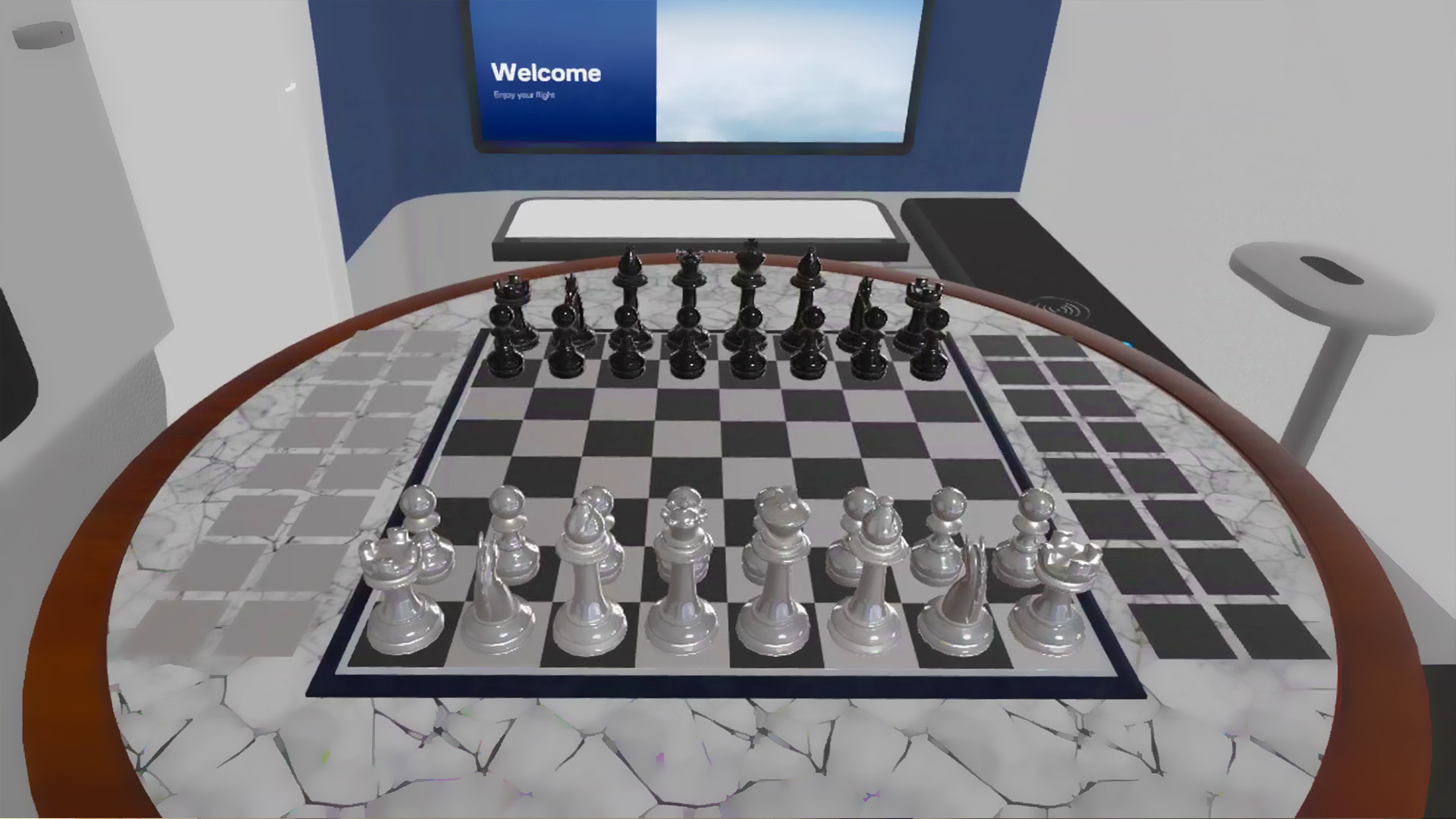

Meta Quest 3's new Travel Mode lets you use the headset on a plane – and stop staring at the Vision Pro wearer in the next aisle

Augmented reality is taking to the skies as Meta is rolling out an experimental Travel Mode to its Quest 2 and Quest 3 headsets. Once enabled, users can enjoy content while on a plane, a function that wasn't previously possible due to certain components.

Sitting in a moving vehicle, such as a car or airplane, can confuse the inertial measurement units (or IMUs, for short) and, as a result, cause the headset to have a hard time tracking your position.

But thanks to Travel Mode, you won’t have this problem. Meta says it fine-tuned the Quest headset's “algorithms to account for the motion of an airplane,” which delivers a much more stable experience while flying. It'll also level the playing field against the Apple Vision Pro, which has offered a travel mode since launch.

You connect the Quest 2 or 3 to a plane's Wi-Fi and access content either from an external tablet or laptop or from the Quest library itself. Meta recommends double-checking whether an app needs an internet connection to work, as in-flight Wi-Fi can be rather spotty. This means that certain video games, among other content, may play worse.

As far as in-flight infotainment systems go, most will not be accessible, except for Lufthansa, thanks to a partnership between Meta and the German-based airline.

Meta's partnership with Lufthansa will provide unique content that is “designed to run on Quest 3 in Travel Mode.” This includes interactive games like chess, meditation exercises, travel podcasts, and “virtual sightseeing previews”. That last one lets you see what your destination is like right before you get there. However, this content will only be offered to people seated in Lufthansa’s Allegris Business Class Suite on select flights.

If you want to try out Travel Mode, you can activate it by going to the Experimental section on your Quest headset’s Settings menu. Enable the feature, and you're ready to use it. Once activated, you can toggle Travel Mode on or off anytime in Quick Settings. Meta plans to offer Travel Mode for additional modes of transportation like trains at some point, but a specific release date has not been announced.

A company representative told us Travel Mode is available to all users globally, although it's unknown when it'll leave its experimental state and become a standard feature. We asked if there are plans to expand the Lufthansa content to other airlines and travel classes like Economy, but they had nothing to share at the moment. Meta wants to keep its pilot program with Lufthansa for the time being; however, it is interested in expanding.

If you're looking for recommendations on what to play on your next flight, check out TechRadar's list of the best Quest 2 games for 2024.

Six major ChatGPT updates OpenAI unveiled at its Spring Update – and why we can't stop talking about them

OpenAI just held its eagerly-anticipated spring update event, making a series of exciting announcements and demonstrating the eye- and ear-popping capabilities of its newest GPT AI models. There were changes to model availability for all users, and at the center of the hype and attention: GPT-4o.

Coming just 24 hours before Google I/O, the launch puts Google's Gemini in a new perspective. If GPT-4o is as impressive as it looked, Google and its anticipated Gemini update better be mind-blowing.

What's all the fuss about? Let's dig into all the details of what OpenAI announced.

1. The announcement and demonstration of GPT-4o, and that it will be available to all users for free

The biggest announcement of the stream was the unveiling of GPT-4o (the 'o' standing for 'omni'), which combines audio, visual, and text processing in real time. Eventually, this version of OpenAI's GPT technology will be made available to all users for free, with usage limits.

For now, though, it's being rolled out to ChatGPT Plus users, who will get up to five times the messaging limits of free users. Team and Enterprise users will also get higher limits and access to it sooner.

GPT-4o will have GPT-4's intelligence, but it'll be faster and more responsive in daily use. Plus, you'll be able to provide it with or ask it to generate any combination of text, image, and audio.

The stream saw Mira Murati, Chief Technology Officer at OpenAI, and two researchers, Mark Chen and Barret Zoph, demonstrate GPT-4o's real-time responsiveness in conversation while using its voice functionality.

The demo began with a conversation about Chen's mental state, with GPT-4o listening and responding to his breathing. It then told a bedtime story to Barret with increasing levels of drama in its voice upon request – it was even asked to talk like a robot.

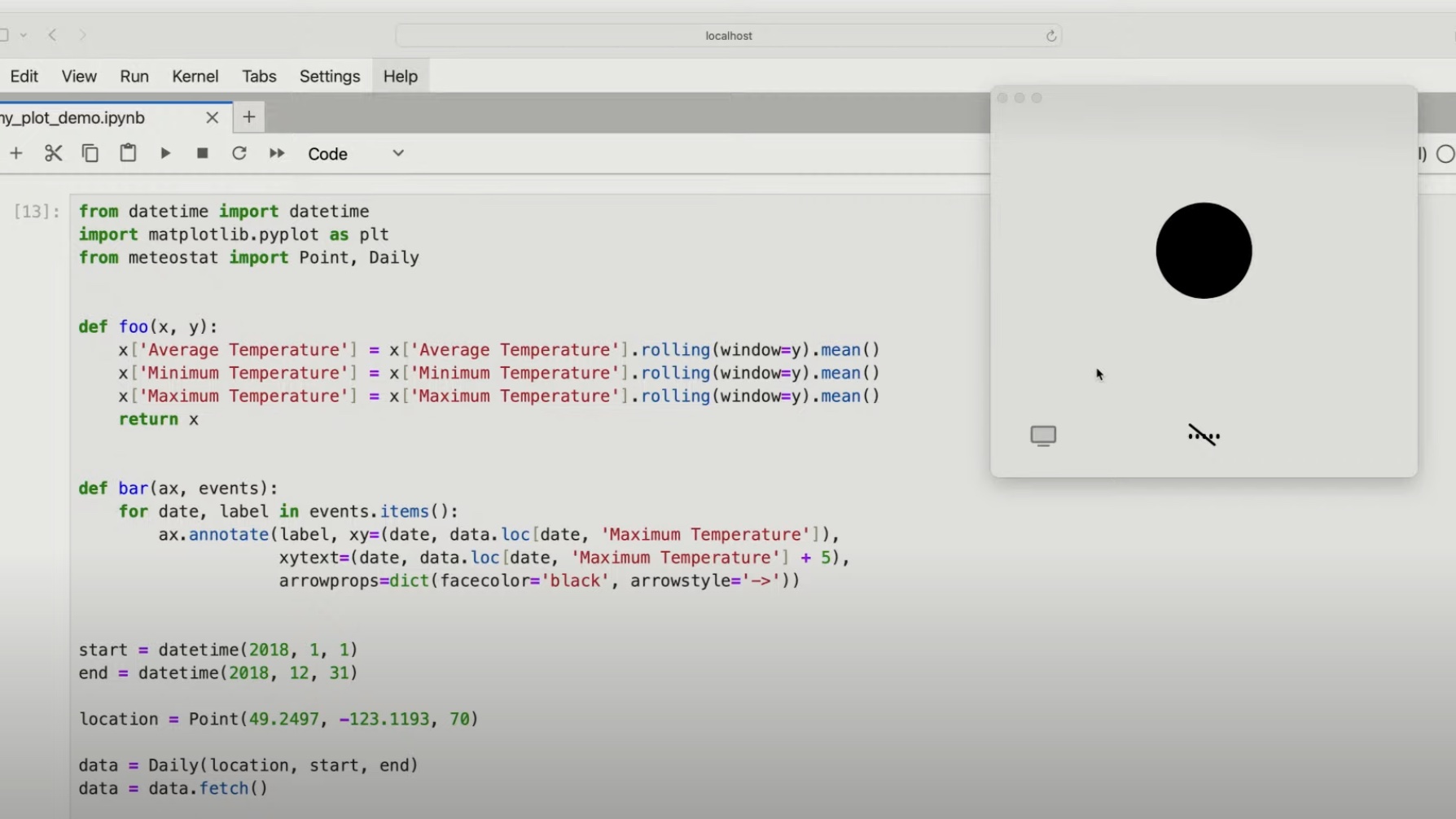

It continued with a demonstration of Barret "showing" GPT-4o a mathematical problem and the model guiding Barret through solving it by providing hints and encouragement. Chen asked why this specific mathematical concept was useful, which it answered at length.

They followed this up by showing GPT-4o some code, which it explained in plain English, and it then provided feedback on the plot that the code generated. The model talked about notable events, the axis labels, and a range of inputs. This was to show OpenAI's continued commitment to improving GPT models' interaction with code bases and their mathematical abilities.

The penultimate demonstration was an impressive display of GPT-4o's linguistic abilities, as it simultaneously translated two languages – English and Italian – out loud.

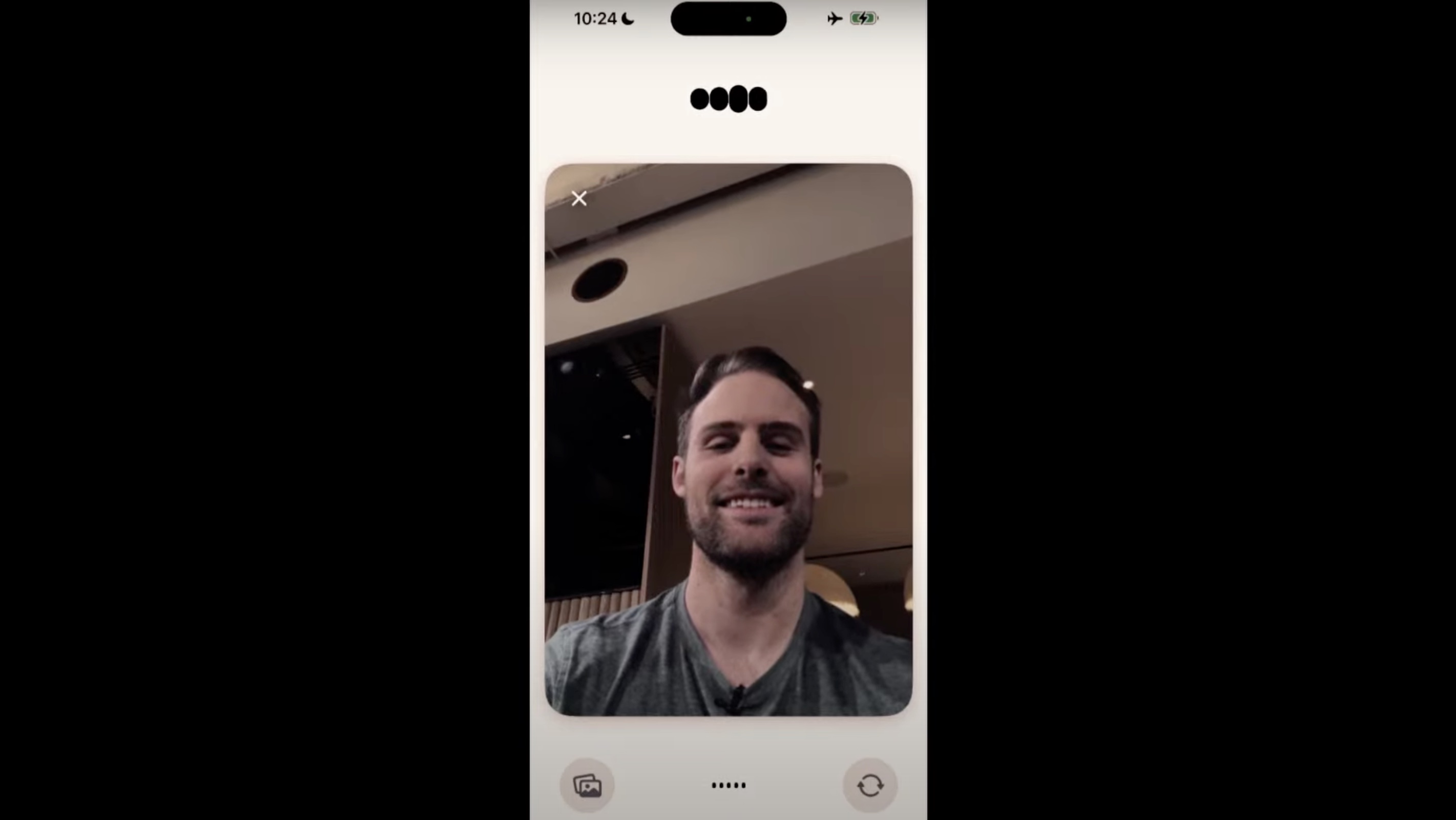

Lastly, OpenAI provided a brief demo of GPT-4o's ability to identify emotions from a selfie sent by Barret, noting that he looked happy and cheerful.

If the AI model works as demonstrated, you'll be able to speak to it more naturally than many existing generative AI voice models and other digital assistants. You'll be able to interrupt it instead of having a turn-based conversation, and it'll continue to process and respond - similar to how we speak to each other naturally. Also, the lag between query and response, previously about two to three seconds, has been dramatically reduced.

ChatGPT equipped with GPT-4o will roll out over the coming weeks, free to try. This comes a few weeks after OpenAI made ChatGPT available to try without signing up for an account.

2. Free users will have access to the GPT store, the memory function, the browse function, and advanced data analysis

GPTs are custom chatbots created by OpenAI and ChatGPT Plus users to help enable more specific conversations and tasks. Now, many more users can access them in the GPT Store.

Additionally, free users will be able to use ChatGPT's memory functionality, which makes it a more useful and helpful tool by giving it a sense of continuity. Also being added to the no-cost plan are ChatGPT's vision capabilities, which let you converse with the bot about uploaded items like images and documents. The browse function lets ChatGPT pull in information from the web when answering.

ChatGPT's abilities have improved in quality and speed in 50 languages, supporting OpenAI’s aim to bring its powers to as many people as possible.

3. GPT-4o will be available in API for developers

OpenAI's latest model will be available for developers to incorporate into their AI apps as a text and vision model. The support for GPT-4o's video and audio abilities will be launched soon and offered to a small group of trusted partners in the API.

4. The new ChatGPT desktop app

OpenAI is releasing a desktop app for macOS to advance its mission to make its products as easy and frictionless as possible, wherever you are and whichever model you're using, including the new GPT-4o. You’ll be able to assign keyboard shortcuts to do processes even more quickly.

According to OpenAI, the desktop app is available to ChatGPT Plus users now and will be available to more users in the coming weeks. It sports a similar design to the updated interface in the mobile app as well.

5. A refreshed ChatGPT user interface

ChatGPT is getting a more natural and intuitive user interface, refreshed to make interaction with the model easier and less jarring. OpenAI wants to get to the point where people barely focus on the AI and for you to feel like ChatGPT is friendlier. This means a new home screen, message layout, and other changes.

6. OpenAI's not done yet

The mission is bold, with OpenAI looking to demystify technology while creating some of the most complex technology that most people can access. Murati wrapped up by stating that we will soon be updated on what OpenAI is preparing to show us next and thanking Nvidia for providing the most advanced GPUs to make the demonstration possible.

OpenAI is determined to shape our interaction with devices, closely studying how humans interact with each other and trying to apply its learnings to its products. The latency of processing all of the different nuances of interaction is part of what dictates how we behave with products like ChatGPT, and OpenAI has been working hard to reduce this. As Murati puts it, its capabilities will continue to evolve, and it’ll get even better at helping you with exactly what you’re doing or asking about at exactly the right moment.

OpenAI's GPT-4o ChatGPT assistant is more life-like than ever, complete with witty quips

So no, OpenAI didn’t roll out a search engine competitor to take on Google at its May 13, 2024 Spring Update event. Instead, OpenAI unveiled GPT-4 Omni (or GPT-4o for short) with human-like conversational capabilities, and it's seriously impressive.

Beyond making this version of ChatGPT faster and free to more folks, GPT-4o expands how you can interact with it, including having natural conversations via the mobile or desktop app. Considering it's arriving on iPhone, Android, and desktop apps, it might pave the way to be the assistant we've all always wanted (or feared).

OpenAI's ChatGPT-4o is more emotional and human-like

GPT-4o has taken a significant step towards understanding human communication in that you can converse in something approaching a natural manner. It comes complete with all the messiness of real-world tendencies like interrupting, understanding tone, and even realizing it's made a mistake.

During the first live demo, the presenter asked for feedback on his breathing technique. He breathed heavily into his phone, and ChatGPT responded with the witty quip, “You’re not a vacuum cleaner.” It advised on a slower technique, demonstrating its ability to understand and respond to human nuances.

So yes, ChatGPT has a sense of humor but also changes the tone of responses, complete with different inflections while conveying a "thought." Like human conversations, you can cut the assistant off and correct it, making it react or stop speaking. You can even ask it to speak in a certain tone, style, or robotic voice. Furthermore, it can even provide translations.

In a live demonstration suggested by a user on X (formerly Twitter), two presenters on stage, one speaking English and one speaking Italian, had a conversation with GPT-4o handling translation. It could quickly deliver the translation from Italian to English and then seamlessly translate the English response back to Italian.

It’s not just voice understanding with GPT-4o, though; it can also understand visuals like a written-out linear equation and then guide you through how to solve it, as well as look at a live selfie and provide a description. That could be what you're wearing or your emotions.

In this demo, GPT said the presenter looked happy and cheerful. It’s not without quirks, though. At one point, ChatGPT said it saw the image of the equation before it was even written out, referring back to a previous visual of just a wooden tabletop.

Throughout the demo, ChatGPT worked quickly and didn't really struggle to understand the problem or ask about it. GPT-4o is also more natural than typing in a query, as you can speak naturally to your phone and get a desired response – not one that tells you to Google it.

A little like "Samantha" in "Her"

If you’re thinking about Her or another futuristic-dystopian film with an AI, you’re not the only one. Speaking with ChatGPT in such a natural way is essentially the Her moment for OpenAI. Considering it will be rolling out to the mobile app and as a desktop app for free, many people may soon have their own Her moments.

The impressive demos across speech and visuals may only be scratching the surface of what's possible. How well GPT-4o performs day-to-day in various environments remains to be seen, and once it's available, TechRadar will be putting it to the test. Still, after this peek, it's clear that GPT-4o is preparing to take on the best Google and Apple have to offer in their eagerly-anticipated AI reveals.

The outlook on GPT-4o

By announcing this the day before Google I/O kicks off, and just a few weeks after new AI gadgets like the Rabbit R1 hit the scene, OpenAI is giving us a taste of the truly useful AI experiences we want. If the rumored partnership with Apple comes to fruition, Siri could be supercharged, and Google will almost certainly show off its latest AI tricks at I/O on May 14, 2024. But will they be enough?

We wish OpenAI had shown off a few more live demos with the latest ChatGPT-4o in what turned out to be a jam-packed, less-than-30-minute keynote. Luckily, it will be rolling out to users in the coming week, and you won’t have to pay to try it out.

Ads in Windows 11 are becoming the new normal and look like they’re headed for your Settings home page

Microsoft looks like it’s forging ahead with its mission to put more ads in parts of the Windows 11 interface, with the latest move being an advert introduced to the Settings home page.

Windows Latest noticed the ad, which is for the Xbox Game Pass, is part of the latest preview release of the OS in the Dev channel (build 26120). For the uninitiated, the Game Pass is Microsoft’s subscription service that grants you access to a host of games for a monthly or yearly subscription fee.

Not every tester will see this advert, though, at least for now, as it’s only rolling out to those who have chosen the option to ‘Get the latest updates as soon as they're available’ (and that’s true of the other features delivered by this preview build). Also, the ad only appears for those signed into a Microsoft account.

Furthermore, Microsoft explains in a blog post introducing the build that the advert for the Xbox Game Pass will only appear to Windows 11 users who “actively play games” on their PC. The other changes provided by this fresh preview release are useful, too, including fixes for multiple known issues, some of which are related to performance hiccups with the Settings app.

While I can see this fresh advertising push won’t play well with Windows 11 users, Windows Latest did try the new update and reports that it’s a significant improvement on the previous version of 24H2. So that’s good news at least, and the tech site further observes that there’s a solution for an installation failure bug in here (stop code error ‘0x8007371B’ apparently).

Windows 11 24H2 is yet to roll out officially for all users, but it’s expected to be the pre-installed operating system on the new Snapdragon X Elite PCs that are scheduled to be shipped in June 2024. A rollout to all users on existing Windows 11 devices will happen several months later, perhaps in September or October.

I’m not the biggest fan of Microsoft’s strategy regarding promoting its own services - and indeed outright ads as is the case here - or the firm’s efforts to push people to upgrade from Windows 10 to Windows 11. Unfortunately, come next year, Windows 10 users will be facing a choice of migrating to Windows 11, or losing out on security updates when support expires for the older OS (in October 2025). That is, if they can upgrade at all - Windows 11’s hardware requirements make this a difficult task for some older PCs.

I hope for my sake personally, and for all Windows 11 users, that Microsoft considers showing that it values us all by not subjecting us to more and more adverts creeping into different parts of the operating system.

On Instagram, a Jewelry Ad Draws Solicitations for Sex With a 5-Year-Old

Advertisers of merchandise for young girls find that adult men can become their unintended audience. In a test ad, convicted sex offenders inquired about a child model.

OpenAI’s big launch event kicks off soon – so what can we expect to see? If this rumor is right, a powerful next-gen AI model

Rumors that OpenAI has been working on something major have been ramping up over the last few weeks, and CEO Sam Altman himself has taken to X (formerly Twitter) to confirm that it won’t be GPT-5 (the next iteration of its breakthrough series of large language models) or a search engine to rival Google. What a new report, the latest in this saga, suggests is that OpenAI might be about to debut a more advanced AI model with built-in audio and visual processing.

This AI model could be revealed imminently in the company's 'Spring Update' event, which kicks off soon at 10am PT / 1pm ET / 6pm BST – here's how you can tune in to watch OpenAI's event.

OpenAI is towards the front of the AI race, striving to be the first to realize a software tool that comes as close as possible to communicating in a similar way to humans, being able to talk to us using sound as well as text, and also capable of recognizing images and objects.

The report detailing this purported new model comes from The Information, which spoke to two anonymous sources who have apparently been shown some of these new capabilities. They claim that the incoming model has better logical reasoning than those currently available to the public, and that it is able to convert text to speech. None of this is new for OpenAI as such, but what is new is all this functionality being unified in the rumored multimodal model.

A multimodal model is one that can understand and generate information across multiple modalities, such as text, images, audio, and video. GPT-4 is also a multimodal model that can process and produce text and images, and this new model would theoretically add audio to its list of capabilities, as well as a better understanding of images and faster processing times.

The Information describes Altman’s vision for OpenAI’s products in the future as involving the development of a highly responsive AI that performs like the fictional AI in the film “Her.” Altman envisions digital AI assistants with visual and audio abilities capable of achieving things that aren’t possible yet, and with the kind of responsiveness that would enable such assistants to serve as tutors for students, for example. Or the ultimate navigational and travel assistant that can give people the most relevant and helpful information about their surroundings or current situation in an instant.

The tech could also be used to enhance existing voice assistants like Apple’s Siri, and usher in better AI-powered customer service agents capable of detecting when a person they’re talking to is being sarcastic, for example.

According to those who have experience with the new model, OpenAI will make it available to paying subscribers, although it’s not known exactly when. Apparently, OpenAI has plans to incorporate the new features into the free version of its chatbot, ChatGPT, eventually.

OpenAI is also reportedly working on making the new model cheaper to run than its most advanced model available now, GPT-4 Turbo. The new model is said to outperform GPT-4 Turbo when it comes to answering many types of queries, but apparently it’s still prone to hallucinations, a common problem with models such as these.

The company is holding an event today at 10am PT / 1pm ET / 6pm BST (or 3am AEST on Tuesday, May 14, in Australia), where OpenAI could preview this advanced model. If this happens, it would put a lot of pressure on one of OpenAI’s biggest competitors, Google.

Google is holding its own annual developer conference, I/O 2024, on May 14, and a major announcement like this could steal a lot of thunder from whatever Google has to reveal, especially when it comes to Google’s AI endeavor, Gemini.

U.S. Awards $120 Million to Polar Semiconductor to Expand Chip Facility

The grant is the latest federal award in a series stemming from the CHIPS and Science Act meant to ramp up domestic production of vital semiconductors.

At Art Auctions, Market Seeks Its Footing After Stumbling Sales and a Hack at Christie’s

Speculation drove art prices to new heights during the pandemic, but declining sales and a cyberattack ignited new worries.

Elon Musk’s Diplomacy: Woo Right-Wing World Leaders. Then Benefit.

Mr. Musk has built a constellation of like-minded heads of state — including Argentina’s Javier Milei and India’s Narendra Modi — to push his own politics and expand his business empire.

Patient Dies Weeks After Kidney Transplant From Genetically Modified Pig

Richard Slayman received the historic procedure in March. The hospital said it had “no indication” his death was related to the transplant.

OpenAI has big news to share on May 13 – but it's not announcing a search engine

OpenAI has announced it's got news to share via a public livestream on Monday, May 13 – but, contrary to previous rumors, the developer of ChatGPT and Dall-E apparently isn't going to use the online event to launch a search engine.

In a social media post, OpenAI says that "some ChatGPT and GPT-4 updates" will be demoed at 10am PT / 1pm ET / 6pm BST on Monday May 13 (which is Tuesday, May 14 at 3am AEST for those of you in Australia). A livestream is going to be available.

OpenAI CEO Sam Altman followed up by saying the big reveal isn't going to be GPT-5 and isn't going to be a search engine, so make of that what you will. "We've been hard at work on some new stuff we think people will love," Altman says. "Feels like magic to me."

Rumors that OpenAI would be taking on Google directly with its own search engine, possibly developed in partnership with Microsoft and Bing, have been swirling for months. It sounds like it's not ready yet though – so we'll have to wait.

OpenAI, Google, and Apple

not gpt-5, not a search engine, but we’ve been hard at work on some new stuff we think people will love! feels like magic to me. monday 10am PT. https://t.co/nqftf6lRL1 (May 10, 2024)

AI chatbots such as Microsoft Copilot already do a decent job of pulling up information from the web – indeed, at their core, these Large Language Models (LLMs) are essentially training themselves on websites in a similar way to how Google indexes them.

It's possible that the future of web search is not a list of links but rather an answer from an AI, based on those links – which raises the question of how websites could carry on getting the revenue they need to supply LLMs with information in the first place. Google itself has also been experimenting with AI in its search results.

In other OpenAI news, according to Mark Gurman at Bloomberg, Apple has "closed in" on a deal to inject some ChatGPT smarts into iOS 18, due later this year. The companies are apparently now "finalizing terms" on the deal.

However, Gurman says that a deal between Apple and Google to use Google's Gemini AI engine is still on the table too. We know that Apple is planning to go big on AI this year, though it sounds as though it may need some help along the way.

Android users may soon have an easier, faster way to magnify on-screen elements

As we inch closer to the launch of Android 15, more of its potential features keep getting unearthed. Industry insider Mishaal Rahman found evidence of a new camera extension called Eyes Free to help stabilize videos shot by third-party apps.

Before that, Rahman discovered another feature within the Android 15 Beta 1.2 update relating to a fourth screen magnification shortcut referred to as the “Two-finger double-tap screen” within the menu.

What it does is perfectly summed up by its name: quickly double-tapping the screen with two fingers lets you zoom in on a specific part of the display. That’s it. This may not seem like a big deal initially, but it is.

As Rahman explains, the current three magnification shortcuts are pretty wonky. The first method requires you to hold down an on-screen button, which is convenient but causes your finger to obscure the view and only zooms into the center. The second method has you hold down both volume buttons, which frees up the screen but takes a while to activate.

The third method is arguably the best one—tapping the phone display three times lets you zoom into a specific area. However, doing so causes the Android device to slow down, so it's not instantaneous. Interestingly enough, the triple-tap method warns people of the performance drop.

This warning is missing on the double-tap option, indicating the zoom is near instantaneous. Putting everything together, you can think of double-tap as the Goldilocks option. Users can control where they want the software to focus on without experiencing any slowdown.

Improved accessibility

At least, it should be that fast and a marked improvement over the triple tap. Rahman states that while his group was testing the feature, they noticed a delay when zooming in. He chalks this up to the unfinished state of the update, although soon after he admits that the slowdown could simply be a part of the tool and may be an unavoidable aspect of the software.

It’ll probably be a while until a more stable version of the double-tap method becomes widely available. If you recall, Rahman and his team could only view the update by manually toggling the option themselves. As far as we know, it doesn’t even work at the moment.

Double-tap seems to be one of the new accessibility features coming to Android 15. There are several in the works, such as the ability to hide “unused notification channels” to help people manage alerts and forcing dark mode on apps that normally don’t support it.

While we have you, be sure to check out TechRadar's round up of the best Android phones for 2024.

Apple Will Revamp Siri to Catch Up to Its Chatbot Competitors

Apple plans to announce that it will bring generative A.I. to iPhones after the company’s most significant reorganization in a decade.

Your iPhone may soon be able to transcribe recordings and even summarize notes

It’s no secret that Apple is working on generative AI. No one knows what it’ll all entail, but a new leak from AppleInsider offers some insight. The publication recently spoke to “people familiar with the matter,” claiming Apple is working on an “AI-powered summarization [tool] and greatly enhanced audio transcription” for multiple operating systems.

The report states these should bring “significant improvements” to staple iOS apps like Notes and Voice Memos. The latter is slated to “be among the first to receive upgraded capabilities,” namely the aforementioned transcriptions. They’ll take up a big portion of the app’s interface, replacing the graphical representation for audio recordings. AppleInsider states it functions similarly to Live Voicemail on iPhone, with a speech bubble triggering the transcription and the text appearing right on the screen.

New summarizing tools

Alongside Voice Memos, the Notes app will apparently also get some substantial upgrades. It’ll gain the ability to record audio and provide a transcription of it, just like Voice Memos. Unique to Notes, though, is the summarization tool, which will provide “a basic text summary” of all the important points in a given note.

Safari and Messages will also receive their own summarization features, although they’ll function differently. The browser will get a tool that creates short breakdowns for web pages, while in Messages, the AI provides a recap of all your texts. It’s unknown if the Safari update will be exclusive to iPhone or if the macOS version will get the same capability, but there’s a chance it could.

Apple, at the time of this writing, is reportedly testing these features for an upcoming release on iOS 18 later this year. According to the report, there are plans to update the corresponding apps with the launch of macOS 15 and iPadOS 18, both of which are expected to come out in 2024.

Extra power needed

It’s important to mention that there are conflicting reports on how these AI models will work. AppleInsider claims certain tools will run “entirely on-device” to protect user privacy. However, a Bloomberg report says some of the AI features on iOS 18 will instead be powered by a cloud server equipped with Apple's M2 Ultra chip, the same hardware found in 2023’s Mac Studio.

The reason for the cloud support is that “complicated jobs” like summarizing articles require extra computing power. iPhones by themselves may not have the ability to run everything internally.

Regardless of how the company implements its software, it could help Apple catch up to its AI rivals. Samsung’s Galaxy S24 has many of these AI features already. Plus, Microsoft’s OneNote app can summarize information thanks to Copilot. Of course, take all these details with a grain of salt. Apple could always change things at the last minute.

Be sure to check out TechRadar's list of the best iPhones for 2024 to see which ones "reign supreme".

You might also like

- Apple’s WWDC 2024 gets official date for iOS 18 news and more – here’s what to expect

- iTunes for Windows 11 gets a fresh update with a vital security fix, and it brings in support for new iPad Air and iPad Pro

- Apple scraps iPad Pro 'Crushed' ad and issues rare apology – and it was the right thing to do

California Will Add a Fixed Charge to Electric Bills and Reduce Rates

Officials said the decision would lower bills and encourage people to use cars and appliances that did not use fossil fuels, but some experts said it would discourage energy efficiency.

Christie’s Website Is Brought Down by Hackers Days Before $840 Million Auctions

The auctioneer’s website was taken offline on Thursday evening and remained down on Friday, days before its spring auctions were set to begin.

Microsoft could turbocharge Edge browser’s autofill game by using AI to help fill out more complex forms

Microsoft Edge looks like it’s getting a new feature that could help you fill out forms more easily thanks to a boost from GPT-4 (the most up-to-date large language model from OpenAI, the creator of ChatGPT).

Browsers like Edge already have auto-fill assistance features to help fill out fields asking for personal information that’s requested frequently, and this ability could see even more improvement thanks to GPT-4’s technology.

The digital assistant currently on offer from Microsoft, Copilot, is also powered by GPT-4, and has seen some considerable integration into Edge already. In theory, the new GPT-4-driven form-filling feature will help Edge users tackle more complex or unusual questions, rather than the typical basic fields (name, address, email, etc.) that existing auto-fill functionality handles just fine.

However, right now this supercharged auto-fill is a feature hidden within the Edge codebase (it’s called “msEdgeAutofillUseGPTForAISuggestions”), so it’s not yet active even in testing. Windows Latest did attempt to activate the new feature, but with no luck, so it remains to be seen how the feature works in action.

Of course, as noted, Edge’s current auto-fill feature is sufficient for most form-filling needs, but that won’t help with form fields that require more complex or longer answers. As Windows Latest observes, what you can do, if you wish, is just paste those kinds of questions directly into Edge’s Copilot sidebar, and the AI can help you craft an answer that way. You could also experiment with different conversation modes to obtain different answers.

This pepped-up auto-fill could be a useful addition for Edge, and Microsoft is clearly trying to develop both its browser and the Copilot AI itself to be more helpful and generally smarter.

That said, it’s hard to say how much Microsoft is prioritizing user satisfaction, as it’s also implementing measures that could annoy some users. We’re thinking of its recent aggressive advertising strategy and its curbing of access to settings if your copy of Windows is unactivated, to pick a couple of examples, not to mention the quickly approaching deprecation date for Windows 10 (its most popular operating system).

Copilot was presented as an all-purpose assistant, but the AI still leaves a lot to be desired. However, it’s gradually seeing improvements and integration into existing Microsoft products, and we’ll have to see if the big bet on Copilot pans out as envisioned.

YOU MIGHT ALSO LIKE