Feed aggregator

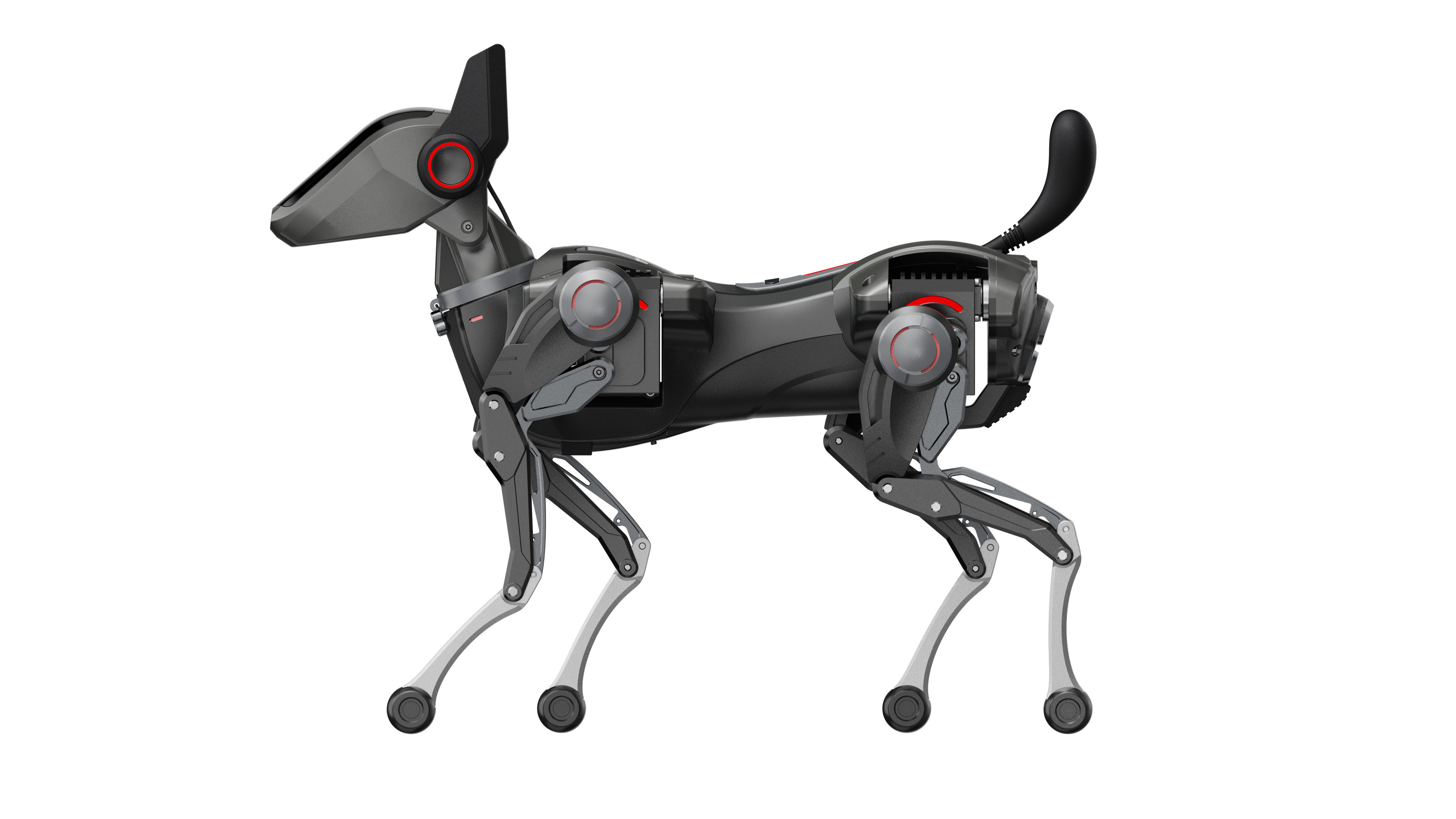

Would you swap your real-life pet for an AI-powered alternative? Meet Sirius the robot dog and decide for yourself

- Meet Sirius, an AI-based programmable and customizable robotic dog companion

- You can train Sirius to perform tricks and upload different voice packs

- It's available worldwide, with pre-orders starting today

From early sci-fi heroes like K9 in Doctor Who and Muffit in the original Battlestar Galactica, robot dogs have long been a staple of TV and film. Yet despite the predictions of futurists over the last 30 to 50 years, household robots and robot pets still haven’t become commonplace in 2025, unless you count the best robot vacuum cleaners.

The history of robo-dogs goes back a long way. Sony was one of the first companies to really get into making robot dogs with its Aibo range, which was first released in 1999 in Japan, but, while still for sale ($2,899.99!), seems to have gone quiet of late.

There have been others since then, like the Minted Dog-E, but with all the advancements in AI that are going on at the moment, it seems inevitable that intelligent robotic dogs would be making a comeback.

The latest robo dog to try to nuzzle its way into our lives is Sirius, from Hengbot. Sirius is an AI-based programmable and customizable robotic dog designed to become your faithful digital pal.

Emotionally intelligent

It’s the AI that really makes Sirius different from previous robo dogs. Described as “emotionally intelligent”, Sirius has its own personality and can mimic the movements of real dogs like jumping, stretching, and even dancing.

Sirius can handle voice recognition, natural language processing, and image recognition. It can even understand your gestures. You can teach it tricks, sync gestures with voice commands, and even upload custom facial expressions.

At roughly 10 inches tall, Sirius is about the size of a chihuahua. It’s not too heavy either, with a 1kg frame constructed from aerospace-grade alloy for fast, responsive actions. Inside its AI brain, Sirius has up to 5 TOPS of edge computing power, and it comes with USB-C peripheral expansion and a 2,250mAh battery that offers a promised 40 to 60 minutes of play time.

“With Sirius, we didn’t just build a robot, we created the first of a new kind of robotic species,” said Peiheng Song, CEO at Hengbot. “Powered by our Neurocore system, Sirius marks the start of a growing universe of intelligent, customizable robots designed to bring your imagination to life.”

Sirius is designed to be easy to customize and program, whether you’re a curious kid, first-time robot owner, or a tech-savvy developer.

It uses a game-like visual editor that lets you choreograph dance routines, teach Sirius custom tricks, or train it, with no coding required.

What do you think? Is Sirius the sort of robot you'd be happy to have roaming around your home? Let us know in the comments below.

Sirius is now available for pre-order at Hengbot.com, with the basic model starting at $1,299 including free worldwide shipping. General availability is expected in Fall 2025.

You might also like

- Viral philanthropist Simon Squibb has downloaded all his knowledge into an AI chatbot that you can run on your phone, for free!

- Google’s new Gemini AI model means your future robot butler will still work even without Wi‑Fi

- Are you worried about AI? It's about to get worse as study shows people now speak like ChatGPT

Google AI Pro's new annual subscription brings you big savings – here's how it compares to ChatGPT

- You can now pay annually for Google AI Pro for the first time

- The overall price is the equivalent of getting two months for free

- As yet, ChatGPT doesn't offer annual subscription options

Good news if you're fully committed to Google Gemini AI, and you like saving money: the Google AI Pro subscription can now be purchased annually as well as monthly, and you'll save yourself a chunk of money if you pay year-to-year.

As spotted by 9to5Google, you can now pay $199.99 for a year of AI goodness, instead of the existing $19.99 a month option – with the latter working out as $239.88 over the 12 months ($39.89 more than an annual plan).

In the UK, your options are £18.99 a month or £189.99 a year (saving you £37.89). It's not immediately clear if the deal is the same in Australia, but there the monthly fee is AU$32.99 – so presumably you'd be looking at AU$329.99 a year (saving AU$65.89). Essentially, you're getting two months free if you pay for a year in advance.
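The savings figures above can be checked with a few lines of arithmetic. This is a minimal sketch using only the prices quoted in the article; the `PLANS` table and the function names are illustrative, and the AU annual price is the article's own presumption rather than a confirmed figure.

```python
from decimal import Decimal

# Monthly and annual prices as quoted in the article.
# The AU annual price is presumed in the article, not confirmed.
PLANS = {
    "US": (Decimal("19.99"), Decimal("199.99")),
    "UK": (Decimal("18.99"), Decimal("189.99")),
    "AU": (Decimal("32.99"), Decimal("329.99")),
}


def annual_saving(monthly, annual):
    """Return (cost of 12 monthly payments, saving from paying annually)."""
    twelve_months = monthly * 12
    return twelve_months, twelve_months - annual


def months_free(monthly, annual):
    """Express the saving as a number of monthly payments, to 2 decimal places."""
    _, saving = annual_saving(monthly, annual)
    return (saving / monthly).quantize(Decimal("0.01"))


for region, (monthly, annual) in PLANS.items():
    total, saving = annual_saving(monthly, annual)
    print(region, total, saving, months_free(monthly, annual))
```

Using `Decimal` rather than floats keeps the currency arithmetic exact, so the results match the article's figures to the penny.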

Of course, the downside of annual plans is that you're committed for a full year, so you can't opt out whenever you like. That lock-in is why annual plans usually cost less overall, wherever you're signing up for a subscription.

How does ChatGPT compare?

| Subscription | Monthly price | Annual price |
| --- | --- | --- |
| Google AI Pro | $19.99 / £18.99 / AU$32.99 | $199.99 / £189.99 (Aus TBC) |
| Google AI Ultra | $249.99 / £234.99 / AU$409.99 | Not available |
| ChatGPT Plus | $20 / £20 (about AU$30.58) | Not available |
| ChatGPT Pro | $200 / £200 (about AU$306) | Not available |

ChatGPT Plus remains at $20 / £20 per month (AU$30.58 at current rates). There's no option to pay annually as yet, and you can also put up $200 / £200 (about AU$306) per month for the top-tier ChatGPT Pro plan, with fewer limits and more features.

For comparison purposes, Google's own super-charged, pro-level plan is Google AI Ultra, and that's going to set you back $249.99 / £234.99 / AU$409.99 per month. At the time of writing, there's no option to pay annually (perhaps understandably), though you do get the first three months for half price if you want to give it a try.

All of which means there's not too much to choose between the monthly costs of Gemini and ChatGPT, when it comes to the cheaper plans that most people are going to sign up for – but if you want to pay annually and save, that's only possible on the Google service.

We'll have to wait and see whether ChatGPT responds with an annual plan of its own. It's also worth mentioning that you can use both Gemini and ChatGPT for free, but with a limited set of features and more restrictions on your usage.

A.I. Is Starting to Wear Down Democracy

Content generated by artificial intelligence has become a factor in elections around the world. Most of it is bad, misleading voters and discrediting the democratic process.

Viral philanthropist Simon Squibb has downloaded all his knowledge into an AI chatbot that you can run on your phone, for free!

- New app from Simon Squibb promises free AI advice

- There are versions for iOS and Android

- You can call AI Simon for a voice chat, too

Simon Squibb, the serial entrepreneur, viral philanthropist and author of the #1 Sunday Times bestselling book What's Your Dream? has just released a new app that distils his 30 years of entrepreneurial knowledge into an AI chatbot that you can talk to for free.

So, if you feel like you need some advice on how to turn your dreams into reality, you’ll now have an AI version of Squibb in your pocket that you can turn to any time you like.

Talking about the new app, Squibb said: “I’ve spent the last five years giving away money, mentorship, and advice to strangers on the street and sharing those moments online so millions can learn from them. But now I want to scale that impact even further. This app gives anyone, anywhere, the chance to get the same help. AI built with my brain that gives you 1-1 access to me, anytime you need it.”

Available on Android and iOS, the app called ‘What’s your dream?’ can be downloaded from your device’s app store right now.

Staircase to heaven

The app starts off by asking you what your dream is, with the example of ‘having my own coffee shop’ presented. You’re then into a chat with AI Simon, with the added benefit of three suggested questions you could ask him.

If you want to make things even more personal you can tap the ‘Call Simon’ button and have a voice chat with the AI version of Simon.

I tried it, and it worked well. I asked AI Simon how many holidays he’d recommend a year, and it understood me perfectly and provided a thoughtful answer. It was very much like chatting to ChatGPT, except it’s free to use.

Squibb has built a significant online presence by nurturing new businesses. He famously transformed a London staircase into a unique venue for aspiring entrepreneurs to pitch ideas, symbolizing his commitment to fostering talent.

Beyond this, Squibb boasts 16 million social media followers. His viral videos, such as a YouTube video on how to quit your job, have garnered billions of views and millions of likes and shares thanks to their engaging, motivational content.

Investing in the future

Squibb actively supports entrepreneurs, having invested over £300,000 in start-ups.

Regarding his decision to make an AI app, Squibb says: “As someone who speaks to businesses day in and day out, I understand how hot the AI conversation is. Some hate it, some love it. Will it take people's jobs? Definitely. But if used in the right way it can also create more dreams and opportunities than ever before. That’s why I wanted to create this tool. To arm people with something accessible that will help them build towards their dreams, rather than take away from them.”

The app was developed by the team at HelpBnk, the free mentorship platform Squibb founded in 2023 to “democratize access to advice and opportunity”.

Squibb continues: “I’m really hopeful about the possibilities that AI will create. But a hammer is only useful if you have a vision of the home you’re going to build. This is the same. My app is a tool. But the dream? That has to come from you.”

Are you worried about AI? It's about to get worse as study shows people now speak like ChatGPT

- A new study shows people sometimes sound a lot like ChatGPT when they speak

- The evidence is in their vocabulary and phrasing

- This shift could flatten emotional nuance and have everyone sound the same

Have you recently heard a TED Talk speaker, or perhaps a friend who teaches at a college, talk about their plan to delve into a new realm and encourage you to be more adept at some activity? There's a chance they've been possessed by the spirit of ChatGPT. Or maybe they've just spent a lot of time interacting with AI chatbots.

Researchers at the Max Planck Institute for Human Development think the latter is becoming a real trend. They've released a new report indicating that a linguistic shift has begun in the wake of ChatGPT's release. Academics and other lecture-adjacent people are starting to sound like AI, their speech peppered with some of the same words that occur far more often in AI-produced text than average, like meticulous, adept, delve, and realm.

The researchers analyzed 280,000 academic YouTube videos across more than 20,000 channels. The change was easy to spot, with some of the words popping up more than 50 percent more often than would be expected. And these aren't AI-written scripts; it's just educated people inadvertently pulling from the AI dictionary. Whether they are using em dashes is harder to tell, but they may well be hidden among the words.
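To make the "50 percent more often" idea concrete, here is a rough sketch of how a change in word frequency between two sets of transcripts could be measured. It is not the researchers' method, which the article does not describe; the function names and the per-1,000-token rate are assumptions chosen for illustration, and only the four marker words come from the article.

```python
import re
from collections import Counter

# The four marker words named in the article.
MARKER_WORDS = ("meticulous", "adept", "delve", "realm")


def rate_per_thousand(texts, words=MARKER_WORDS):
    """Occurrences of each marker word per 1,000 tokens across the given texts."""
    tokens = [t for text in texts for t in re.findall(r"[a-z']+", text.lower())]
    counts = Counter(tokens)
    total = len(tokens) or 1  # avoid dividing by zero on empty input
    return {w: 1000 * counts[w] / total for w in words}


def percent_change(before, after):
    """Percent change in rate for each word; None when the baseline rate is zero."""
    return {
        w: None if before[w] == 0 else 100 * (after[w] - before[w]) / before[w]
        for w in before
    }
```

Calling `percent_change(rate_per_thousand(old_transcripts), rate_per_thousand(new_transcripts))` with two hypothetical lists of transcript strings would return, for each marker word, how much more or less often it appears in the newer set.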

I should also say that someone using those words doesn't mean they are being influenced by AI writing. I can point to writing of mine going back decades that uses all of the examples of AI vocabulary precisely because they feel evocative and interesting.

AI thesaurus

It might seem like a minor issue, but it could portend a deeper problem. The researchers found the AI-influenced words weren’t just more frequent, they were replacing more vivid, less structured language. What once might have been a passionate, complex argument would become dull and antiseptic. Sanding the texture off our language and always defaulting to the phrases used by AI could, at its worst, reduce the color, emotion, and regional quirks that enliven how we speak. Linguistic diversity doesn’t thrive on autocomplete.

It could even mean a decline in our manners. There's a debate about whether it's worth being polite to AI chatbots. Should you say “please” to ChatGPT or “thank you” to Gemini? Conversation is conversation. If we are brusque enough with AI, it will bleed into how we speak to other humans, and the world might feel a little less friendly.

At the same time, it’s hard to resist completely. If you’re an academic trying to write a paper or a content manager trying to meet a deadline, ChatGPT can be a useful co-author. It writes cleanly and is often direct and even incisive in its analysis. But the tradeoff is a voice that’s often monotonous in long-form, no matter the prompt. And if you rely on it too often, that voice becomes yours.

It’s worth noting that we’ve seen this pattern before. Technology has always shaped language. The telegraph encouraged brevity, and telephones made "hello" the standard greeting. Texting gave us LOL and ROFL. Twitter had us saying "hashtag" out loud, while emojis have people saying "upside down smiley face" in actual conversation. We’re emulating something not because it’s natural, but because it’s what we’re now trained to expect.

It's hard to miss the irony of creating an AI chatbot to mimic humans, only to have humans start mimicking AI. Odd as it is to contemplate, you may have to pay attention to how you speak and the words you use lest you fall into the vocabulary your AI pal uses. Delve into the meticulous research on what makes your language unique and become adept in the realm of uncommon idioms.
